1. OptaPlanner introduction

1.1. What is OptaPlanner?

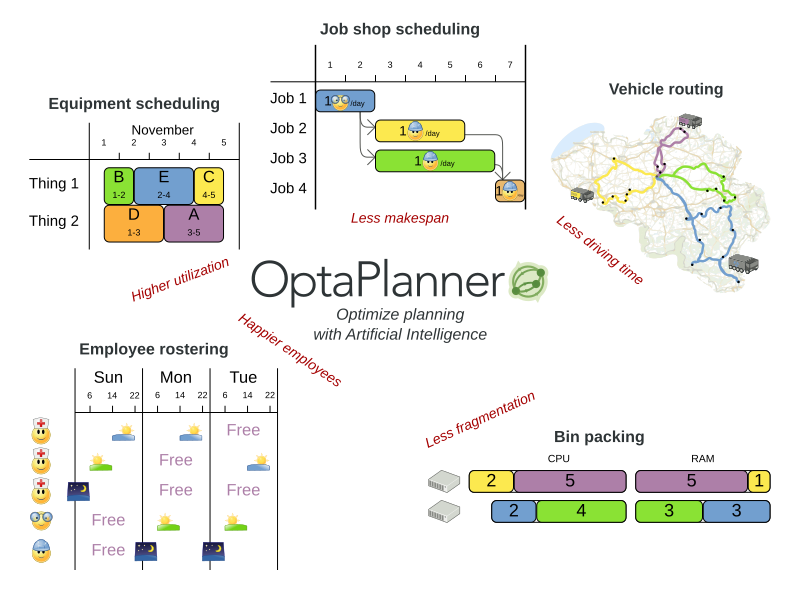

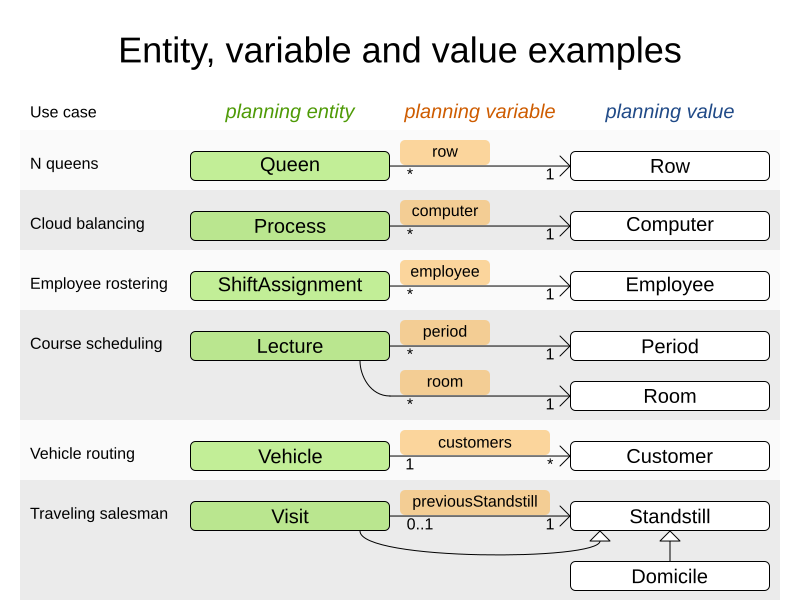

Every organization faces planning problems: providing products or services with a limited set of constrained resources (employees, assets, time and money). OptaPlanner optimizes such planning to do more business with fewer resources. This is known as Constraint Satisfaction Programming (which is part of the Operations Research discipline).

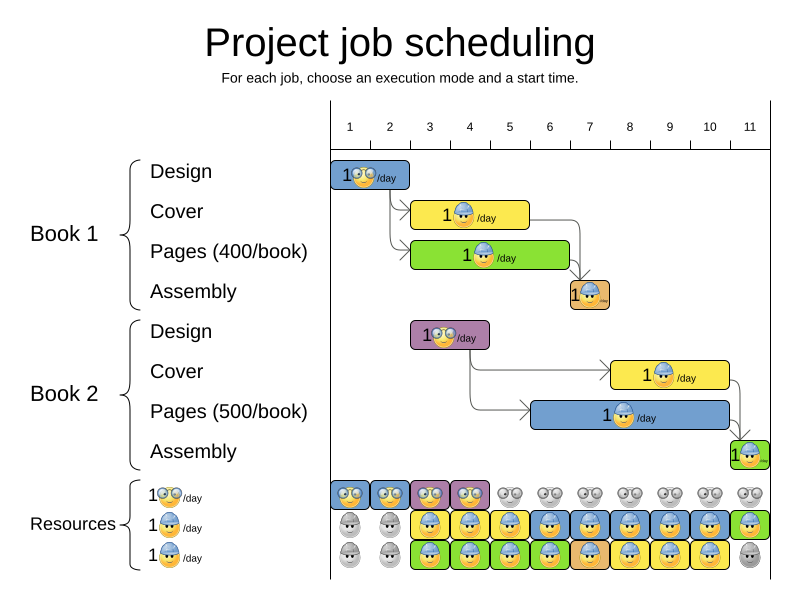

OptaPlanner is a lightweight, embeddable constraint satisfaction engine which optimizes planning problems. It solves use cases such as:

-

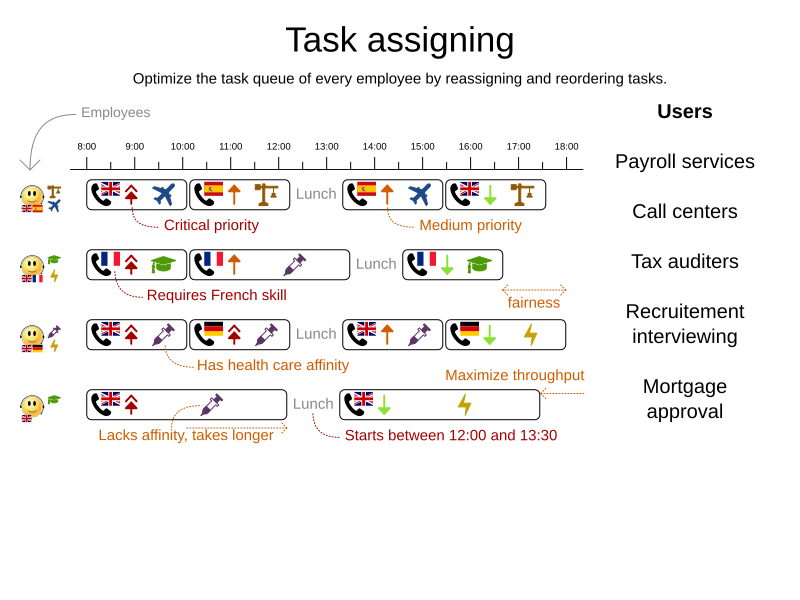

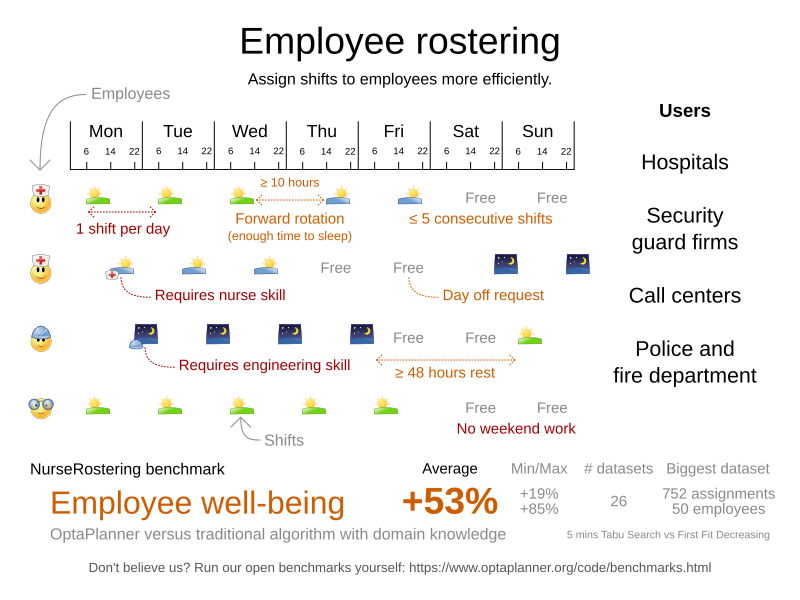

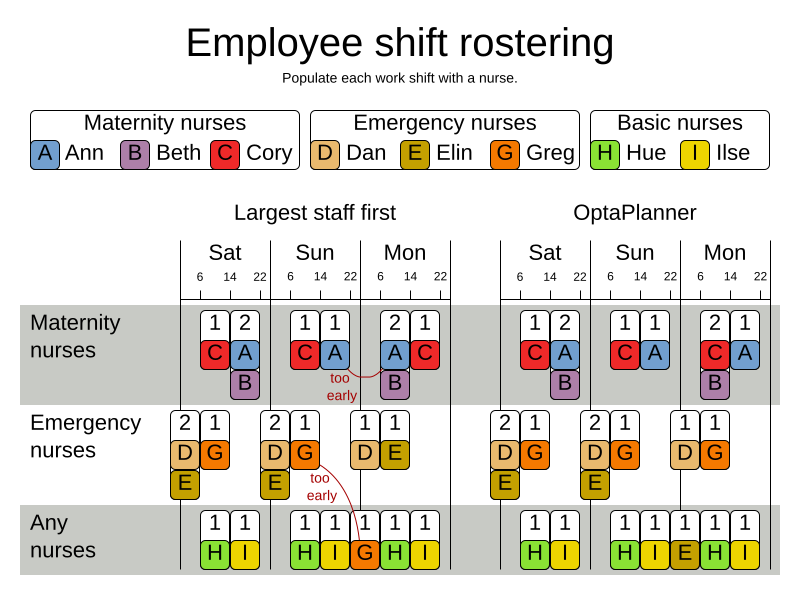

Employee shift rostering: timetabling nurses, repairmen, …

-

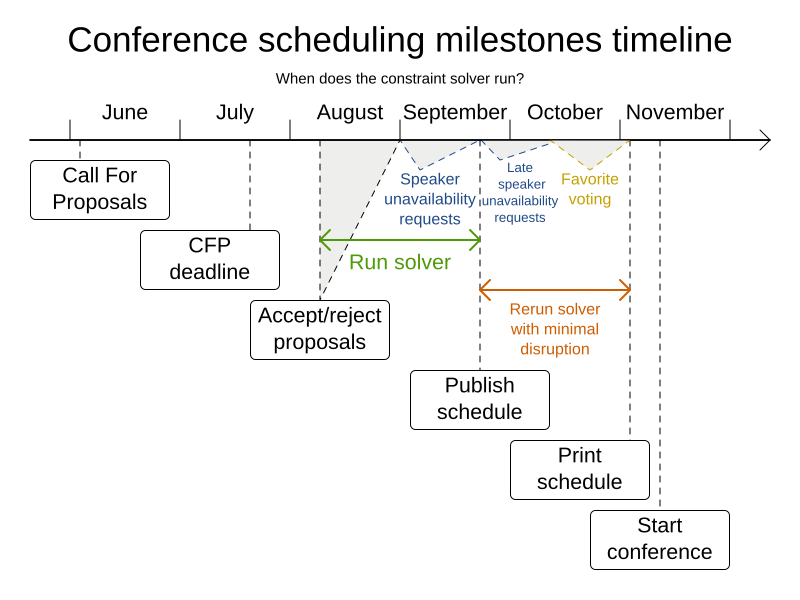

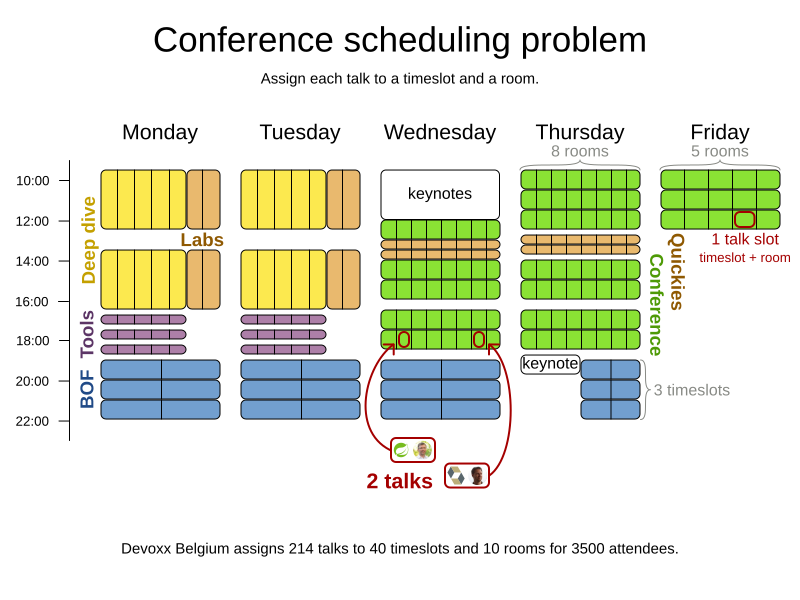

Agenda scheduling: scheduling meetings, appointments, maintenance jobs, advertisements, …

-

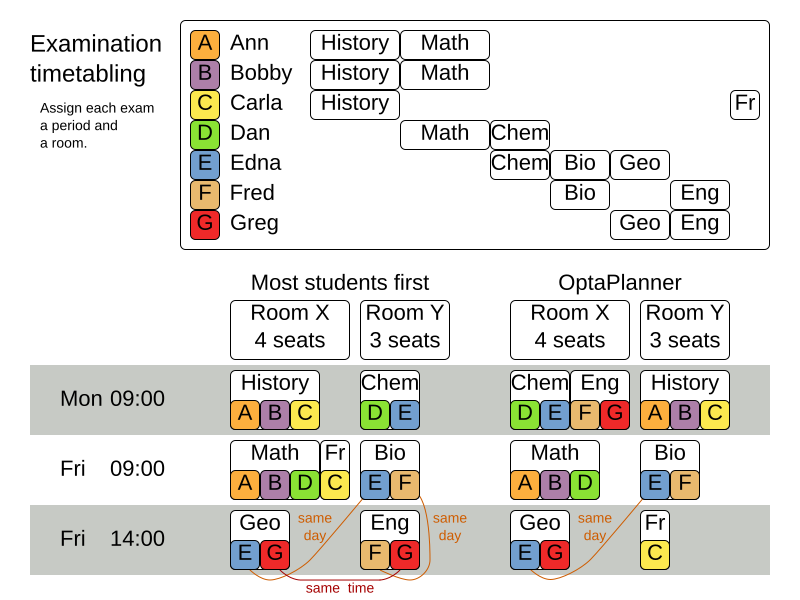

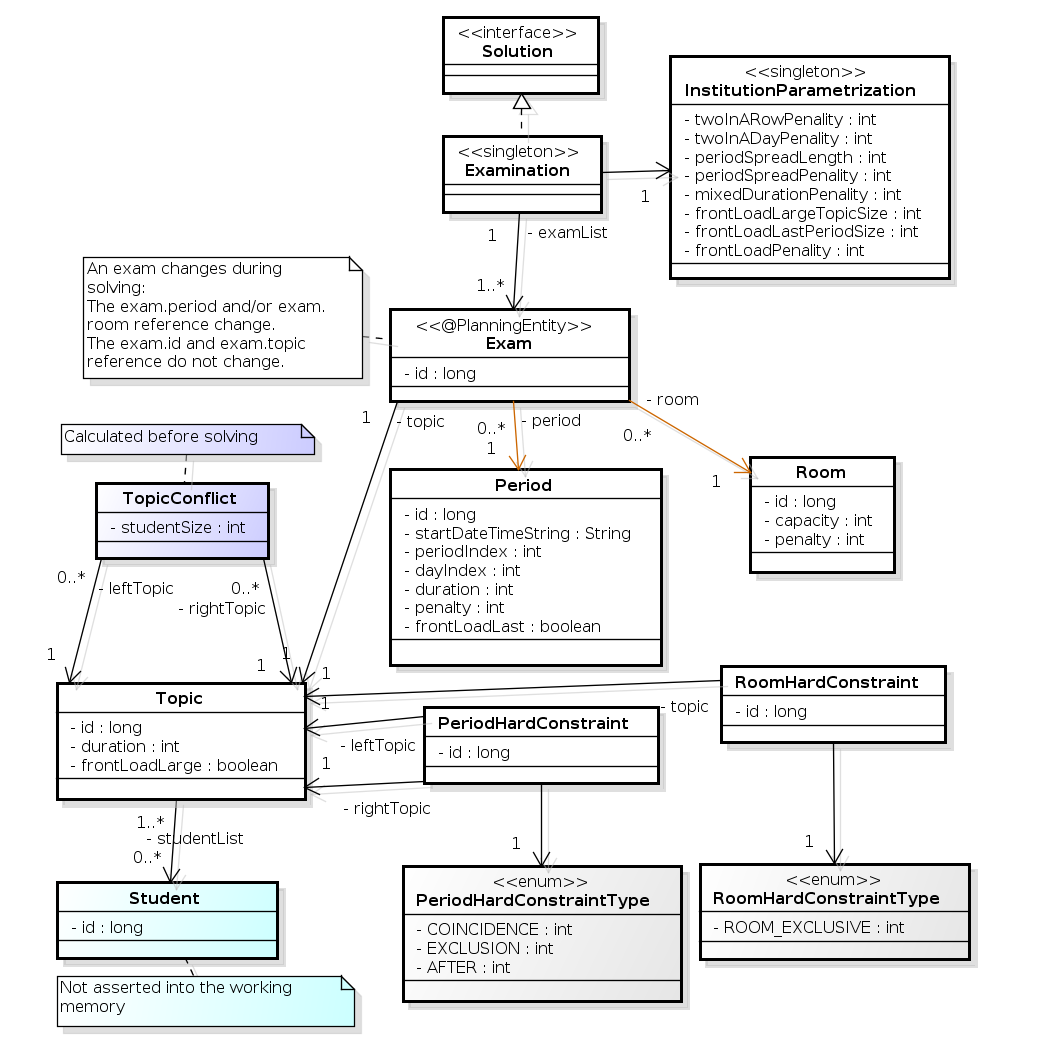

Educational timetabling: scheduling lessons, courses, exams, conference presentations, …

-

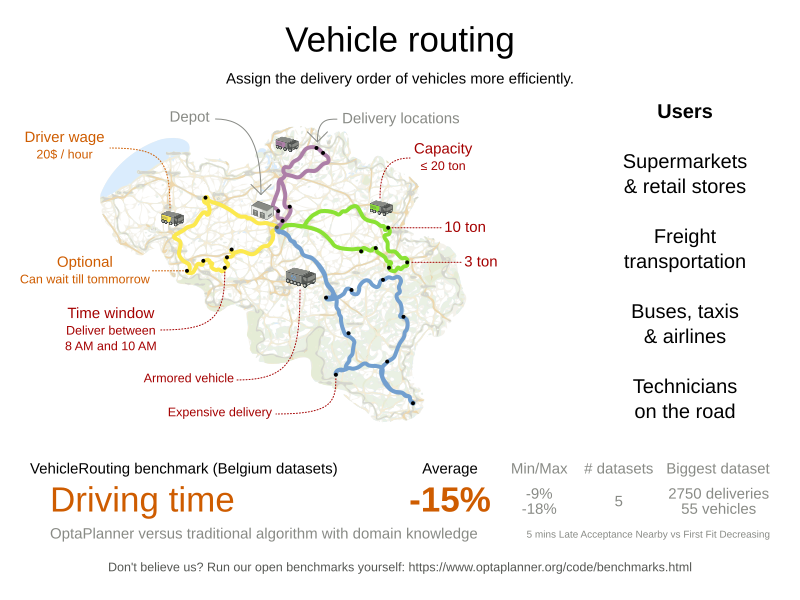

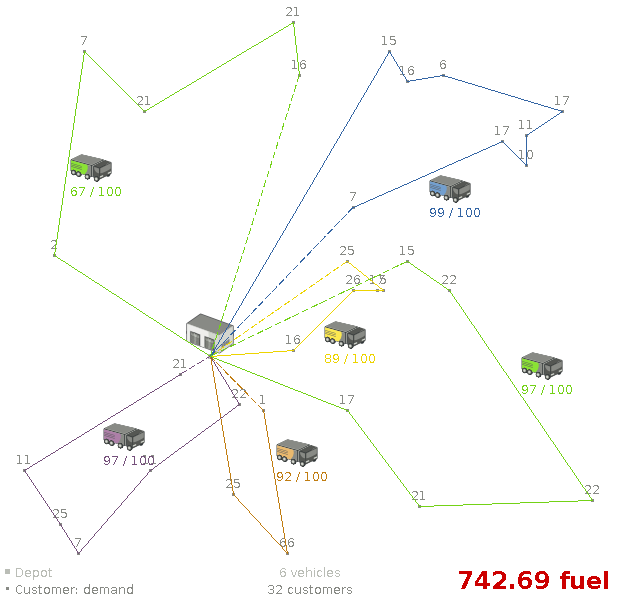

Vehicle routing: planning vehicle routes (trucks, trains, boats, airplanes, …) for moving freight and/or passengers through multiple destinations using known mapping tools …

-

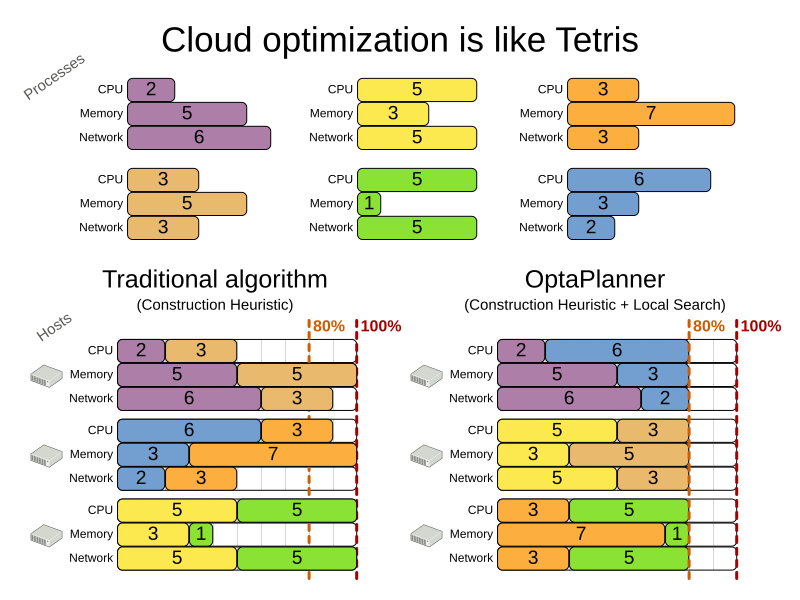

Bin packing: filling containers, trucks, ships, and storage warehouses with items, but also packing information across computer resources, as in cloud computing …

-

Job shop scheduling: planning car assembly lines, machine queue planning, workforce task planning, …

-

Cutting stock: minimizing waste while cutting paper, steel, carpet, …

-

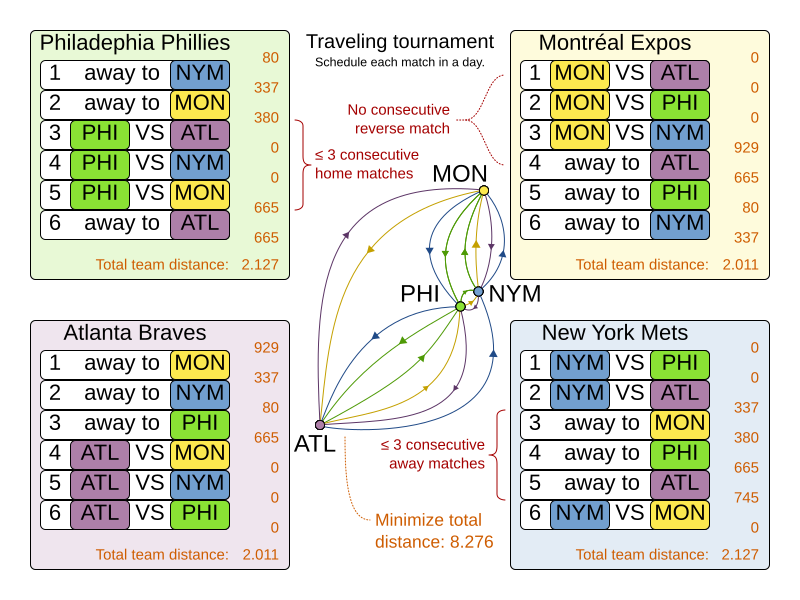

Sport scheduling: planning games and training schedules for football leagues, baseball leagues, …

-

Financial optimization: investment portfolio optimization, risk spreading, …

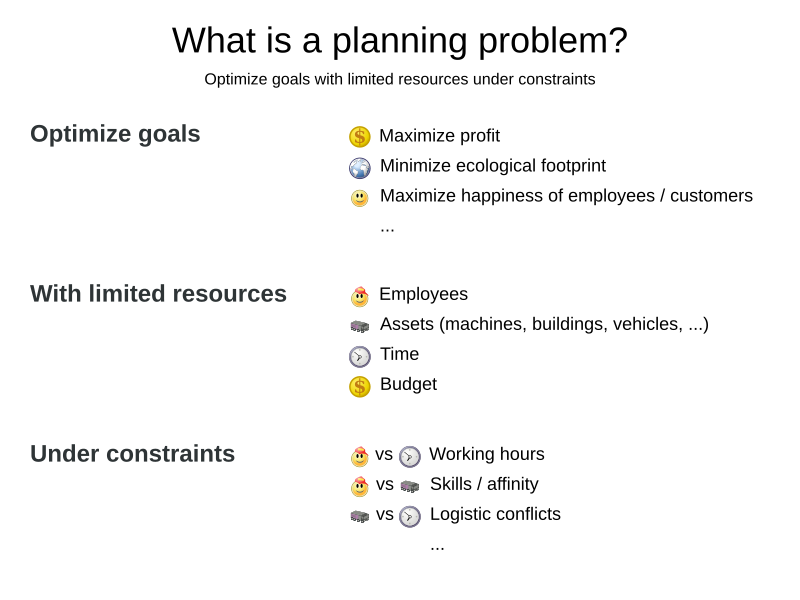

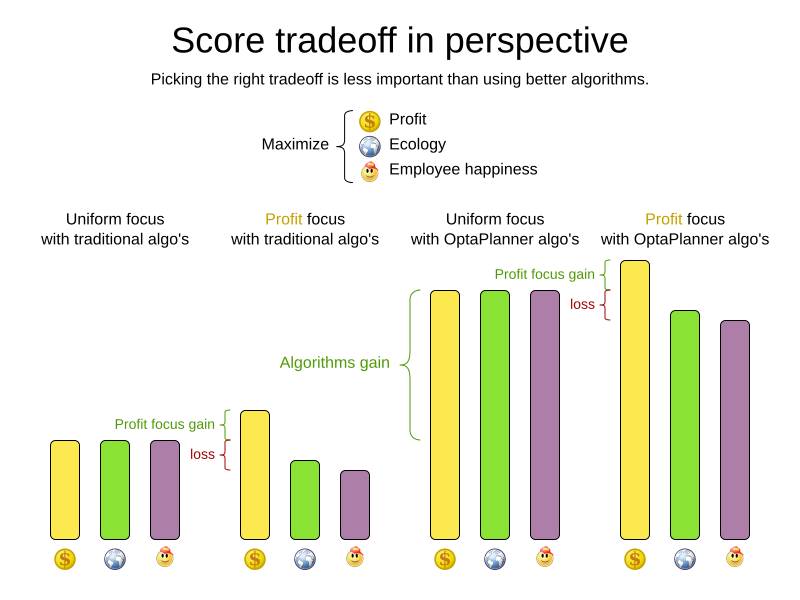

1.2. What is a planning problem?

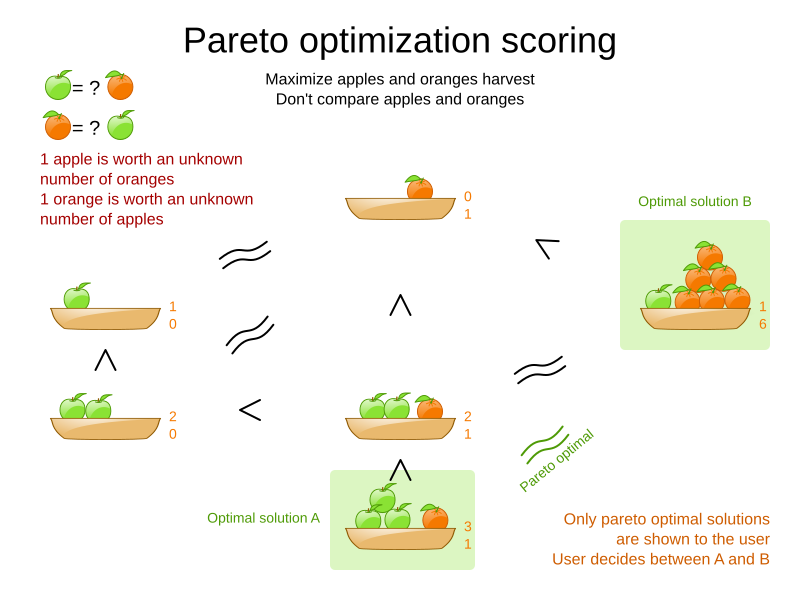

A planning problem has an optimal goal, based on limited resources and under specific constraints. Optimal goals can be any number of things, such as:

- Maximized profits - the optimal goal results in the highest possible profit.
- Minimized ecological footprint - the optimal goal has the least amount of environmental impact.
- Maximized satisfaction for employees or customers - the optimal goal prioritizes the needs of employees or customers.

The ability to achieve these goals relies on the number of resources available, such as:

- The number of people.
- The amount of time.
- The budget.
- Physical assets, for example machinery, vehicles, computers, and buildings.

Specific constraints related to these resources must also be taken into account, such as the number of hours a person works, their ability to use certain machines, or compatibility between pieces of equipment.

OptaPlanner helps Java™ programmers solve constraint satisfaction problems efficiently. Under the hood, it combines optimization heuristics and metaheuristics with very efficient score calculation.

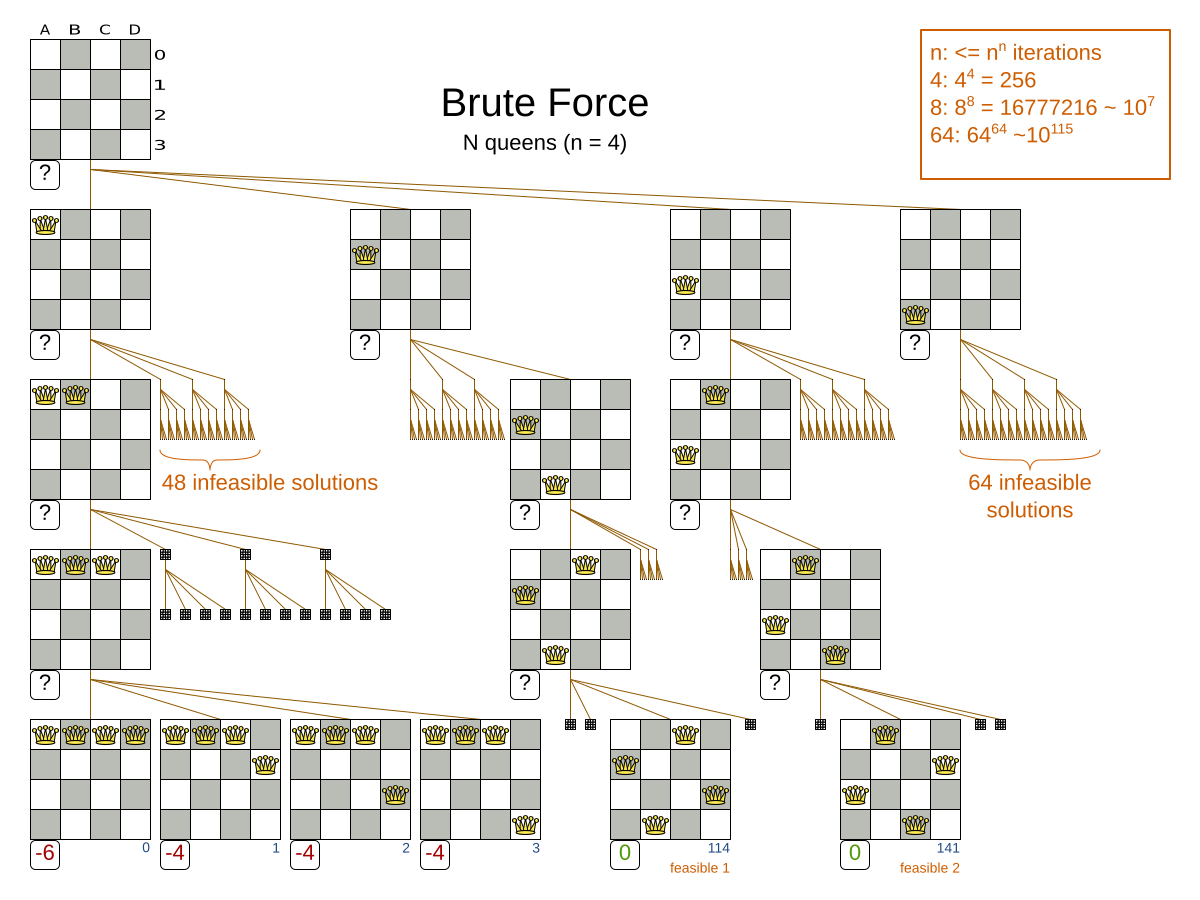

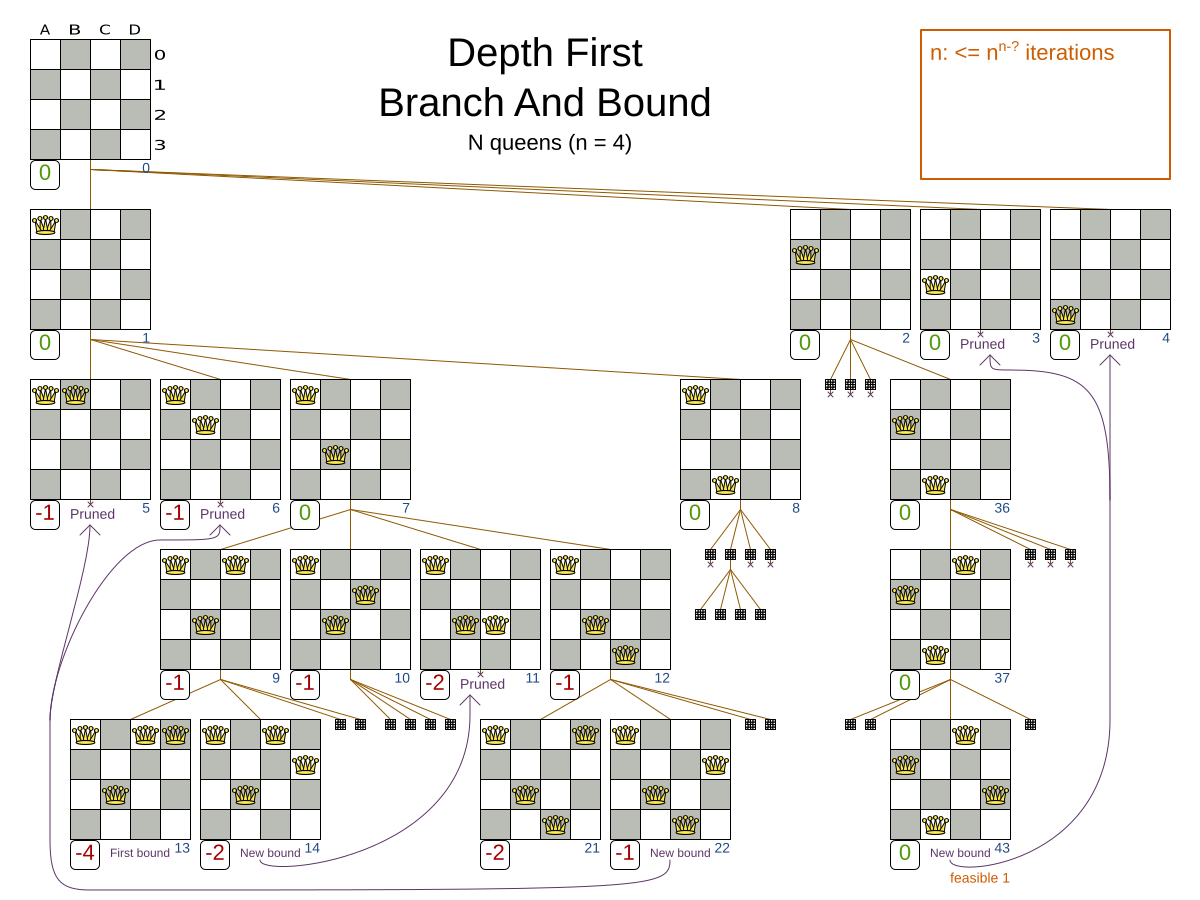

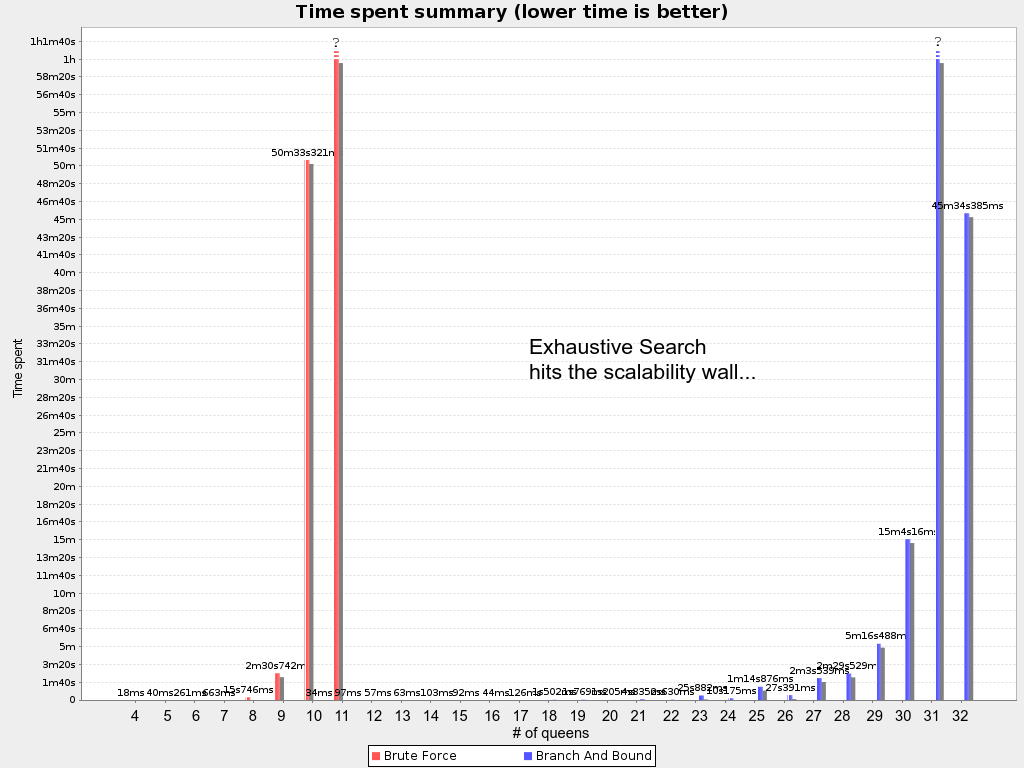

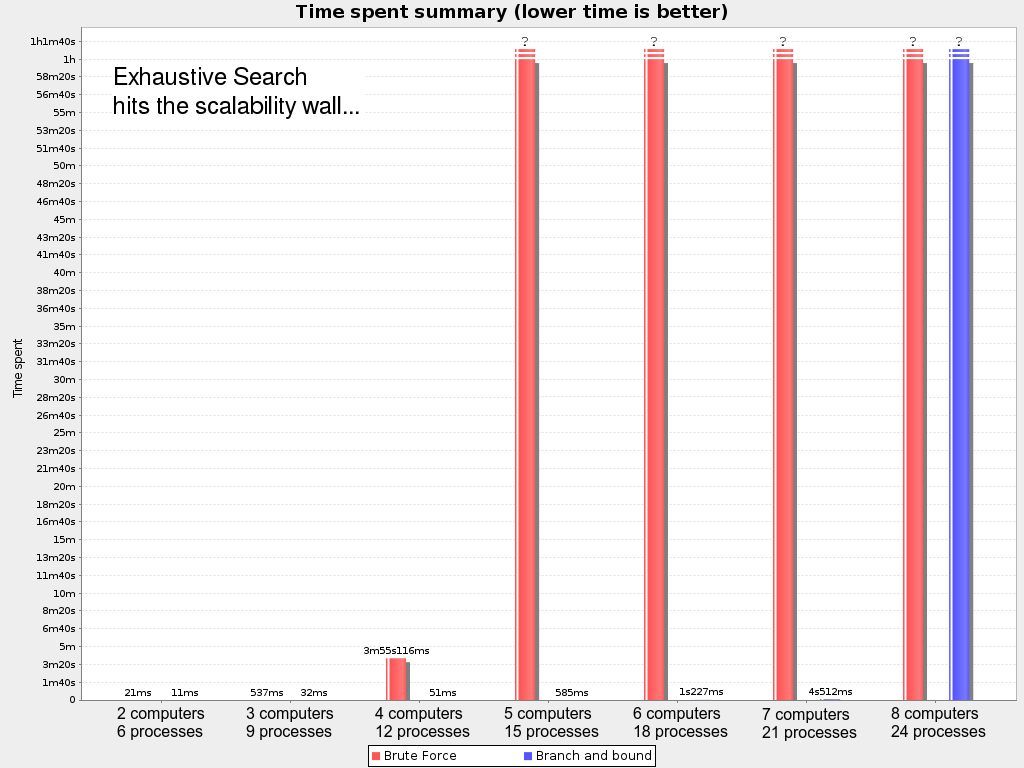

1.2.1. A planning problem is NP-complete or NP-hard

All the use cases above are probably NP-complete/NP-hard, which means in layman’s terms:

- It’s easy to verify a given solution to a problem in reasonable time.
- There is no silver bullet to find the optimal solution of a problem in reasonable time (*).

(*) At least, none of the smartest computer scientists in the world have found such a silver bullet yet. But if they find one for 1 NP-complete problem, it will work for every NP-complete problem. In fact, there’s a $1,000,000 reward for anyone who proves whether or not such a silver bullet exists.

The implication of this is pretty dire: solving your problem is probably harder than you anticipated, because the two common techniques won’t suffice:

-

A Brute Force algorithm (even a smarter variant) will take too long.

-

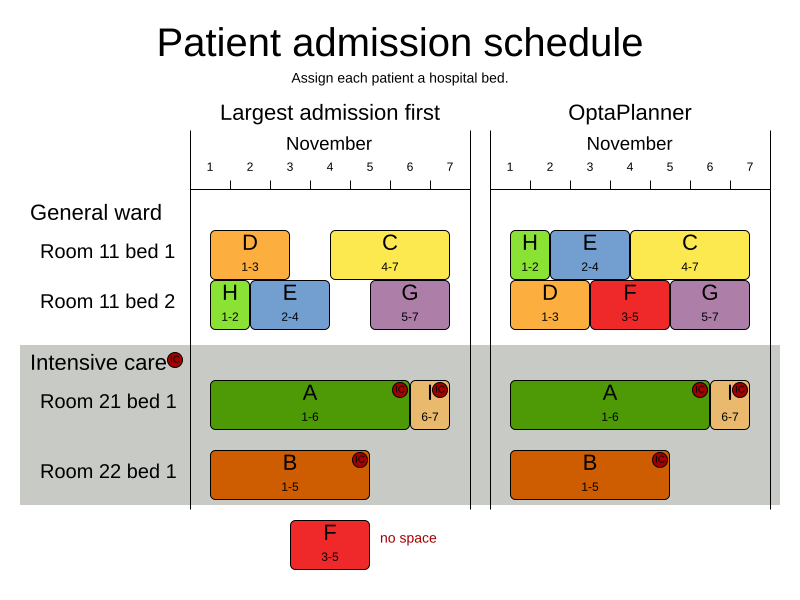

A quick algorithm, for example in bin packing, putting in the largest items first, will return a solution that is far from optimal.

By using advanced optimization algorithms, OptaPlanner does find a near-optimal solution in reasonable time for such planning problems.
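The weakness of a quick algorithm can be seen on a tiny bin packing instance. The following is a minimal sketch in plain Java (it does not use OptaPlanner; the class name `FirstFitDecreasing` and the dataset are assumptions made for illustration). It packs the largest items first into the first bin that still has room. For items of size 5, 4, 4, 3, 2, 2 and bins of capacity 10, it opens three bins, although two bins are enough: {5, 3, 2} and {4, 4, 2}.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative only: a greedy "largest items first" bin packing heuristic.
final class FirstFitDecreasing {

    /** Returns the number of bins opened when packing the largest items first. */
    static int binsUsed(int[] itemSizes, int binCapacity) {
        int[] sorted = itemSizes.clone();
        Arrays.sort(sorted); // ascending order; iterate backwards for largest first
        List<Integer> freeSpacePerBin = new ArrayList<>();
        for (int i = sorted.length - 1; i >= 0; i--) {
            int size = sorted[i];
            if (size > binCapacity) {
                throw new IllegalArgumentException("Item larger than a bin: " + size);
            }
            boolean placed = false;
            // Put the item in the first open bin that still has enough room.
            for (int b = 0; b < freeSpacePerBin.size(); b++) {
                if (freeSpacePerBin.get(b) >= size) {
                    freeSpacePerBin.set(b, freeSpacePerBin.get(b) - size);
                    placed = true;
                    break;
                }
            }
            if (!placed) {
                freeSpacePerBin.add(binCapacity - size); // open a new bin
            }
        }
        return freeSpacePerBin.size();
    }

    public static void main(String[] args) {
        int[] items = {5, 4, 4, 3, 2, 2};
        System.out.println("Bins used: " + binsUsed(items, 10));
    }
}
```

On real datasets with more items and more constraints, the gap between such a greedy result and the optimal solution tends to grow.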

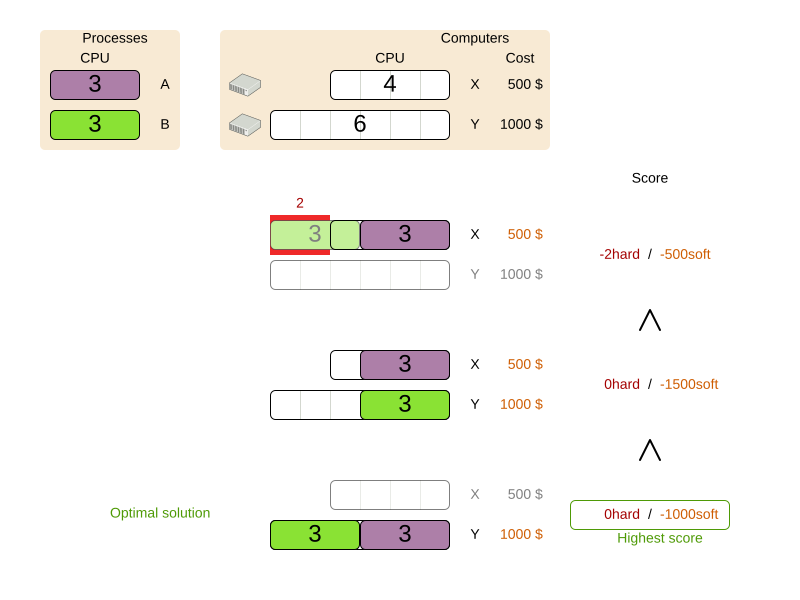

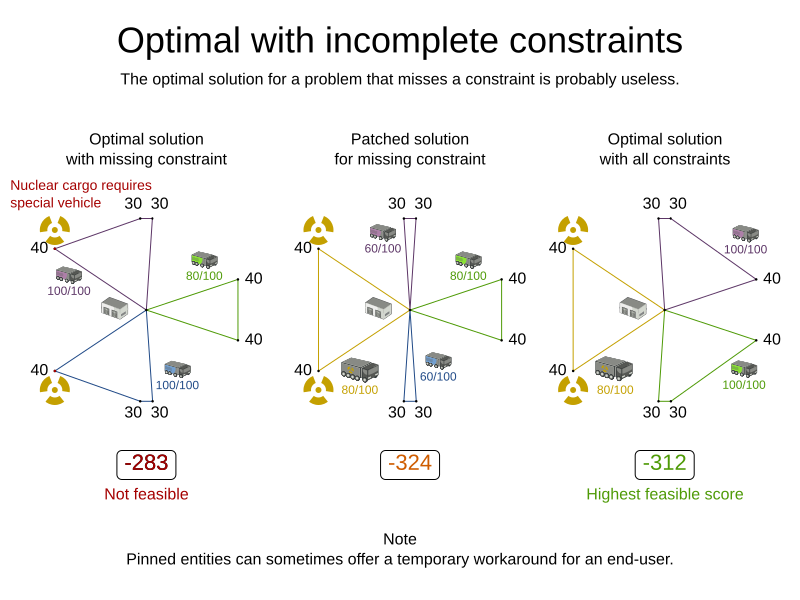

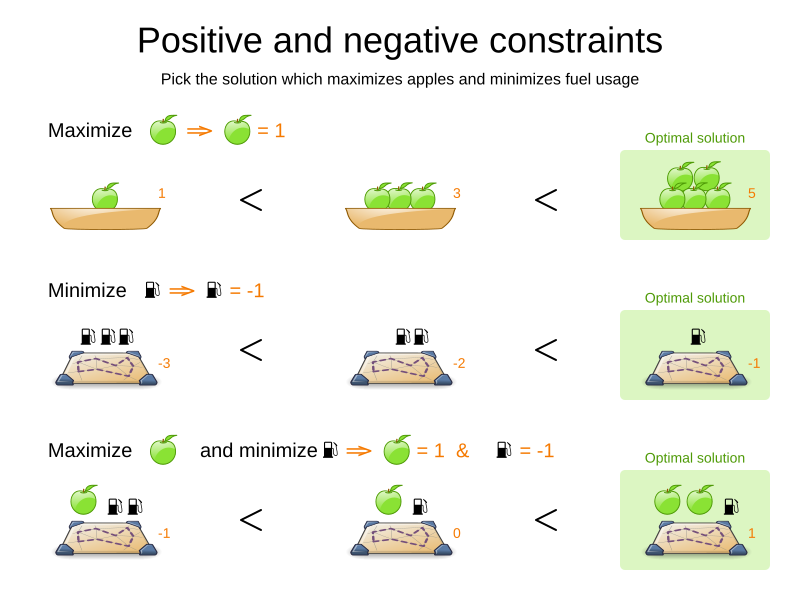

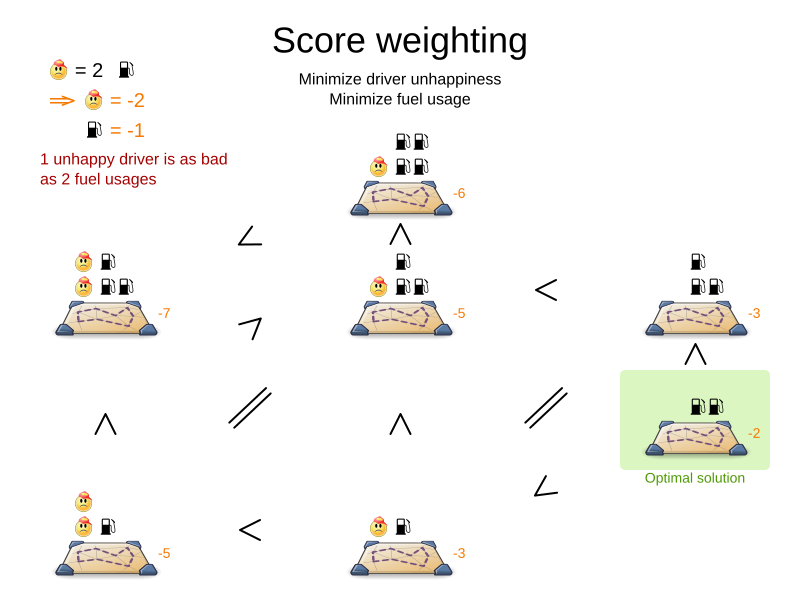

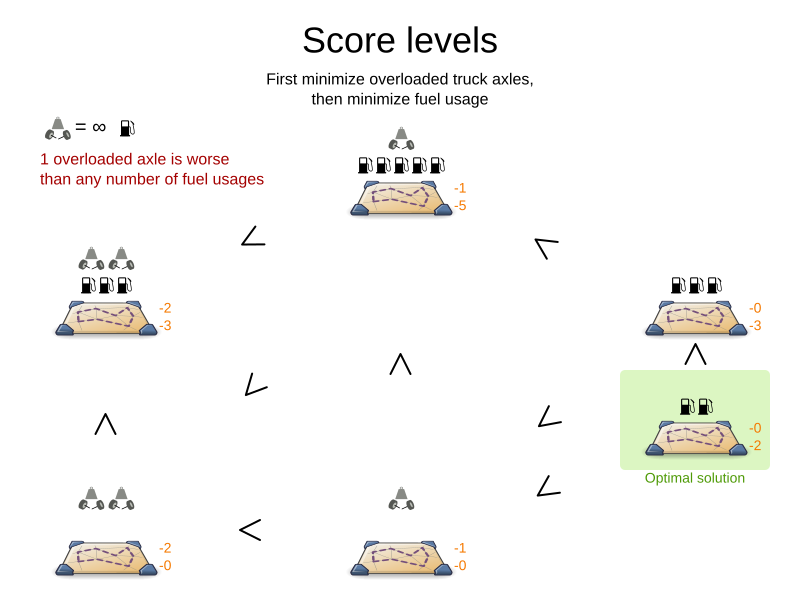

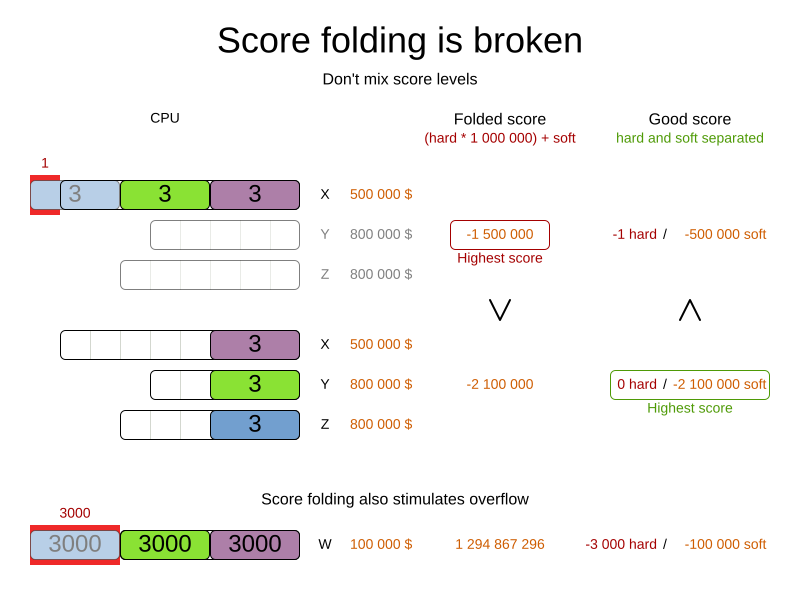

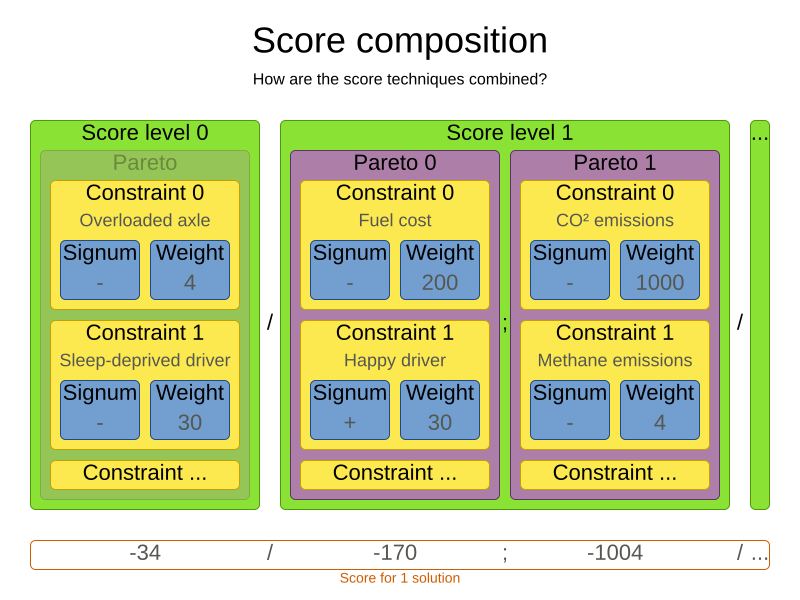

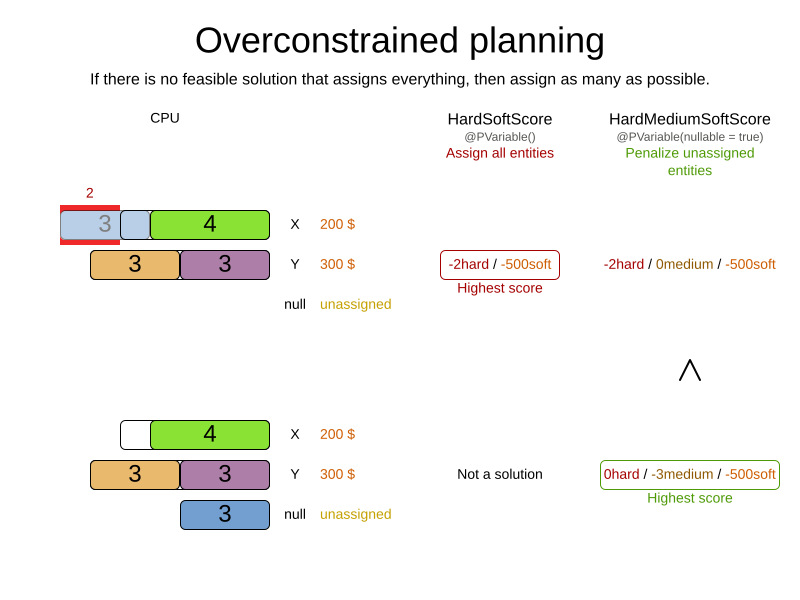

1.2.2. A planning problem has (hard and soft) constraints

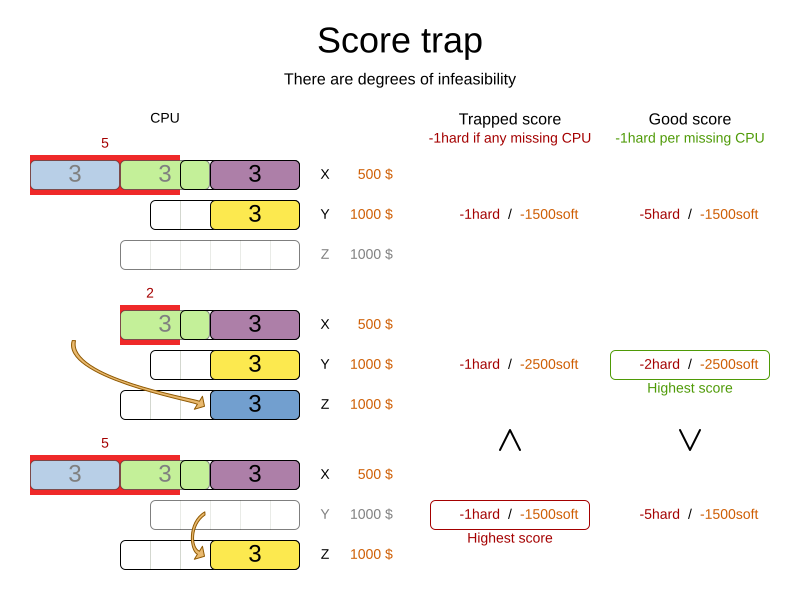

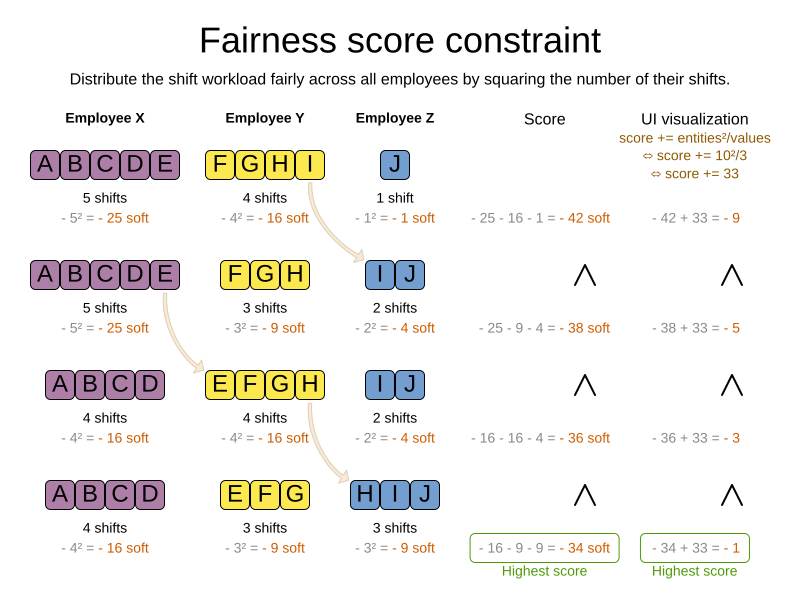

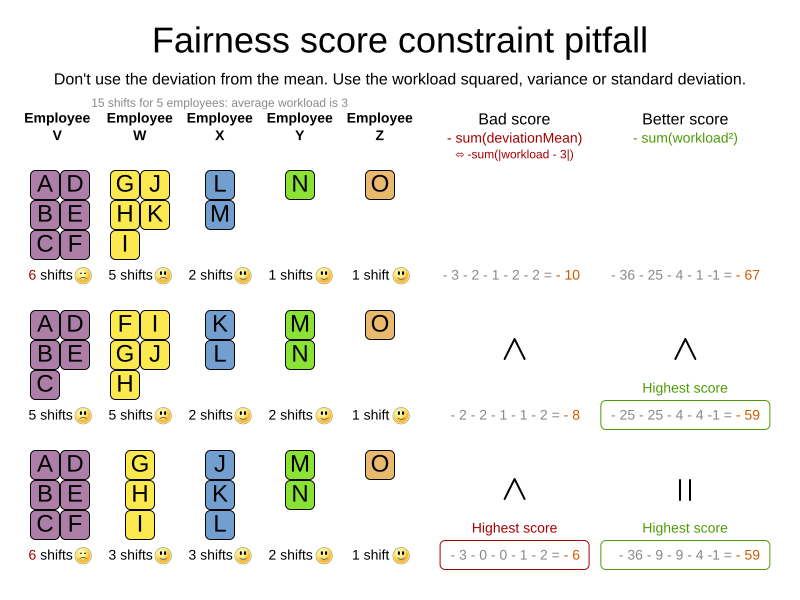

Usually, a planning problem has at least two levels of constraints:

- A (negative) hard constraint must not be broken. For example: 1 teacher cannot teach 2 different lessons at the same time.
- A (negative) soft constraint should not be broken if it can be avoided. For example: Teacher A does not like to teach on Friday afternoon.

Some problems have positive constraints too:

- A positive soft constraint (or reward) should be fulfilled if possible. For example: Teacher B likes to teach on Monday morning.

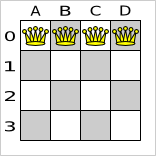

Some basic problems (such as N queens) only have hard constraints. Some problems have three or more levels of constraints, for example hard, medium and soft constraints.

These constraints define the score calculation (AKA fitness function) of a planning problem. Each solution of a planning problem can be graded with a score. With OptaPlanner, score constraints are written in an Object Oriented language, such as Java™ code. Such code is easy, flexible and scalable.

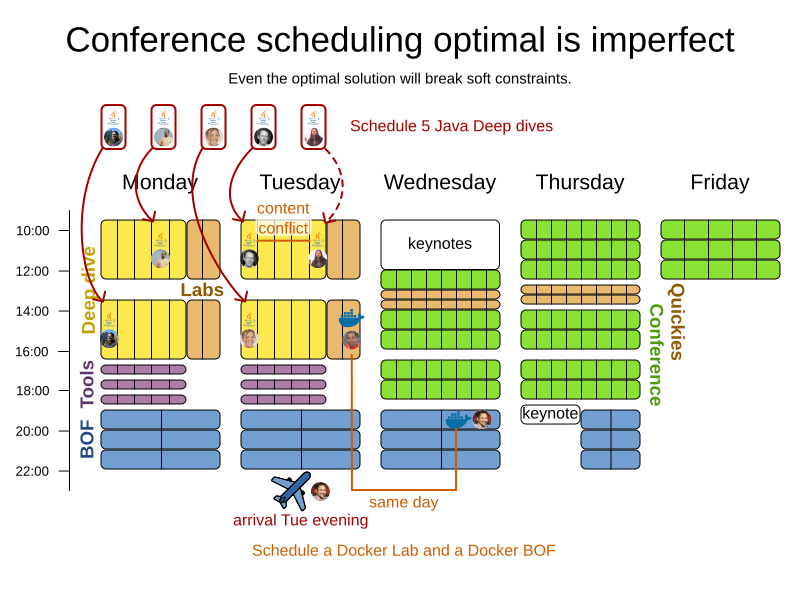

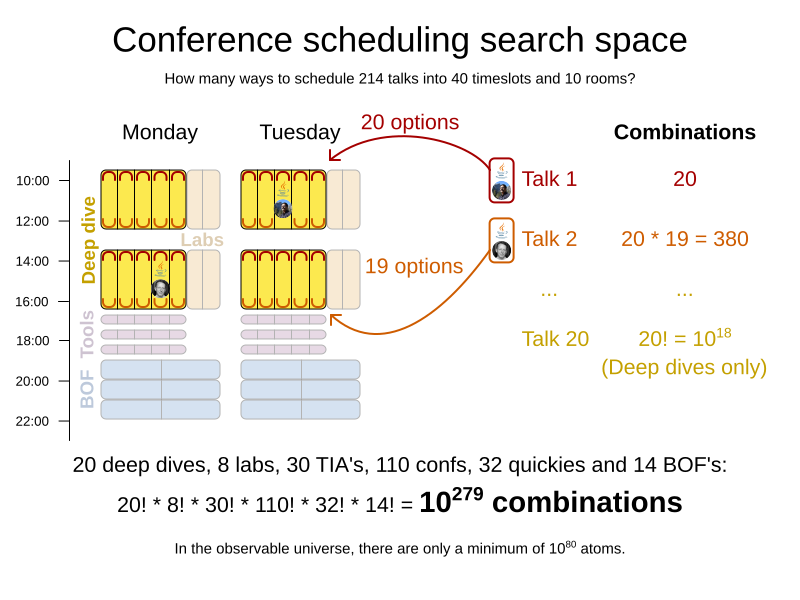

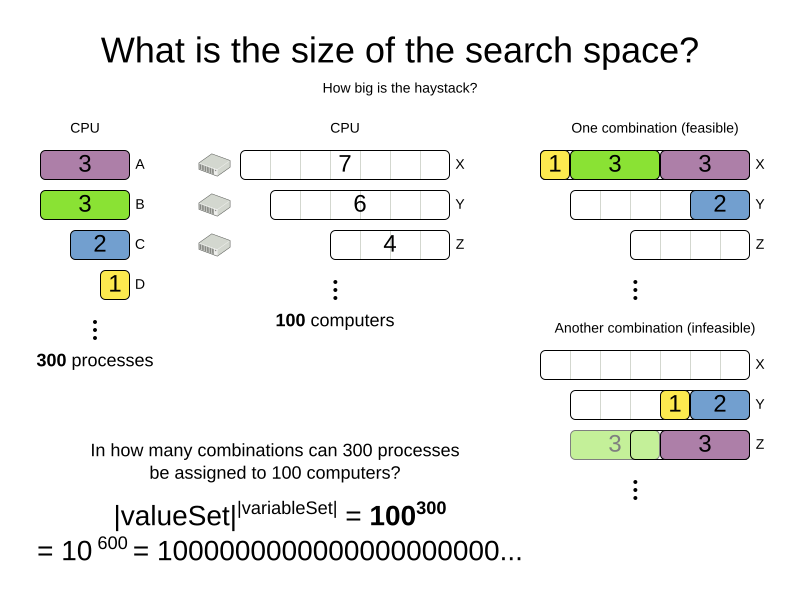

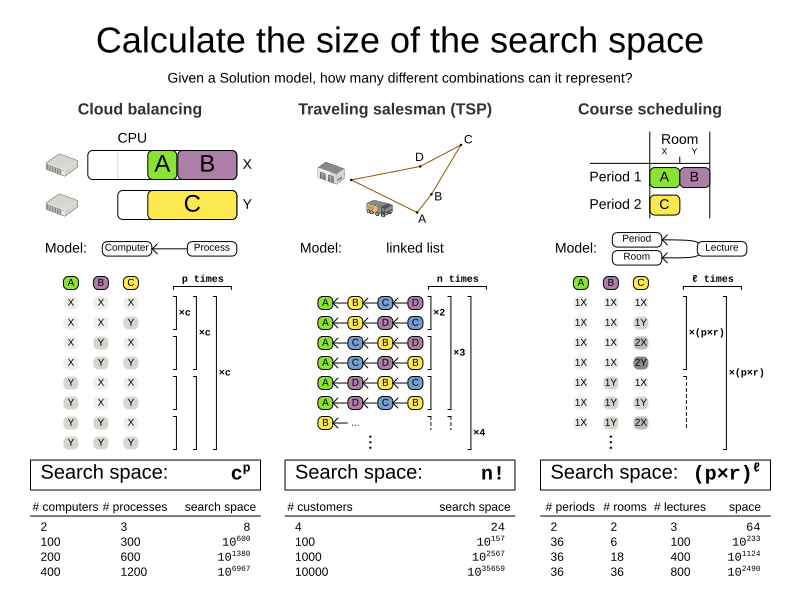

1.2.3. A planning problem has a huge search space

A planning problem has a number of solutions. There are several categories of solutions:

- A possible solution is any solution, whether or not it breaks any number of constraints. Planning problems tend to have an incredibly large number of possible solutions. Many of those solutions are worthless.
- A feasible solution is a solution that does not break any (negative) hard constraints. The number of feasible solutions tends to be relative to the number of possible solutions. Sometimes there are no feasible solutions. Every feasible solution is a possible solution.
- An optimal solution is a solution with the highest score. Planning problems tend to have 1 or a few optimal solutions. There is always at least 1 optimal solution, even in the case that there are no feasible solutions and the optimal solution isn’t feasible.
- The best solution found is the solution with the highest score found by an implementation in a given amount of time. The best solution found is likely to be feasible and, given enough time, it’s an optimal solution.

Counterintuitively, the number of possible solutions is huge (if calculated correctly), even with a small dataset. As you can see in the examples, most instances have a lot more possible solutions than the minimal number of atoms in the known universe (10^80). Because there is no silver bullet to find the optimal solution, any implementation is forced to evaluate at least a subset of all those possible solutions.
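To get a feeling for these numbers, the size of the search space can be estimated with a few lines of Java. This is a rough sketch under a simplifying assumption that is not taken from the text above: every planning variable independently picks one value from the same set, so the number of possible solutions is the number of values raised to the number of variables. The class and method names are hypothetical.

```java
import java.math.BigInteger;

// Illustrative only: estimates the number of possible solutions
// when each variable can independently take any of the values.
final class SearchSpaceSize {

    static BigInteger possibleSolutions(int variableCount, int valueCount) {
        return BigInteger.valueOf(valueCount).pow(variableCount);
    }

    public static void main(String[] args) {
        BigInteger atomsInUniverse = BigInteger.TEN.pow(80);
        // A modest dataset: 100 variables with 10 candidate values each.
        BigInteger size = possibleSolutions(100, 10);
        System.out.println("Digits in search space size: " + size.toString().length());
        System.out.println("More than 10^80: " + (size.compareTo(atomsInUniverse) > 0));
    }
}
```

Even this modest dataset of 100 variables with 10 values each yields 10^100 possible solutions, which is why evaluating all of them is not an option.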

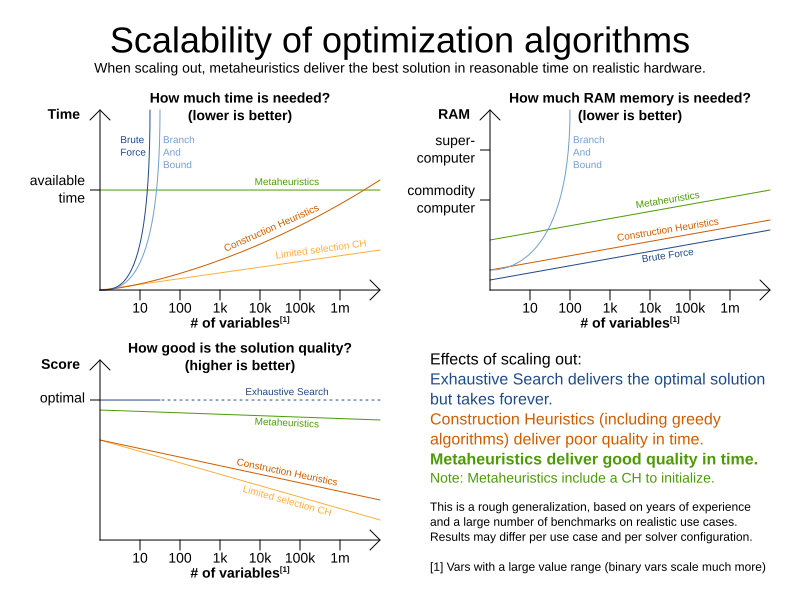

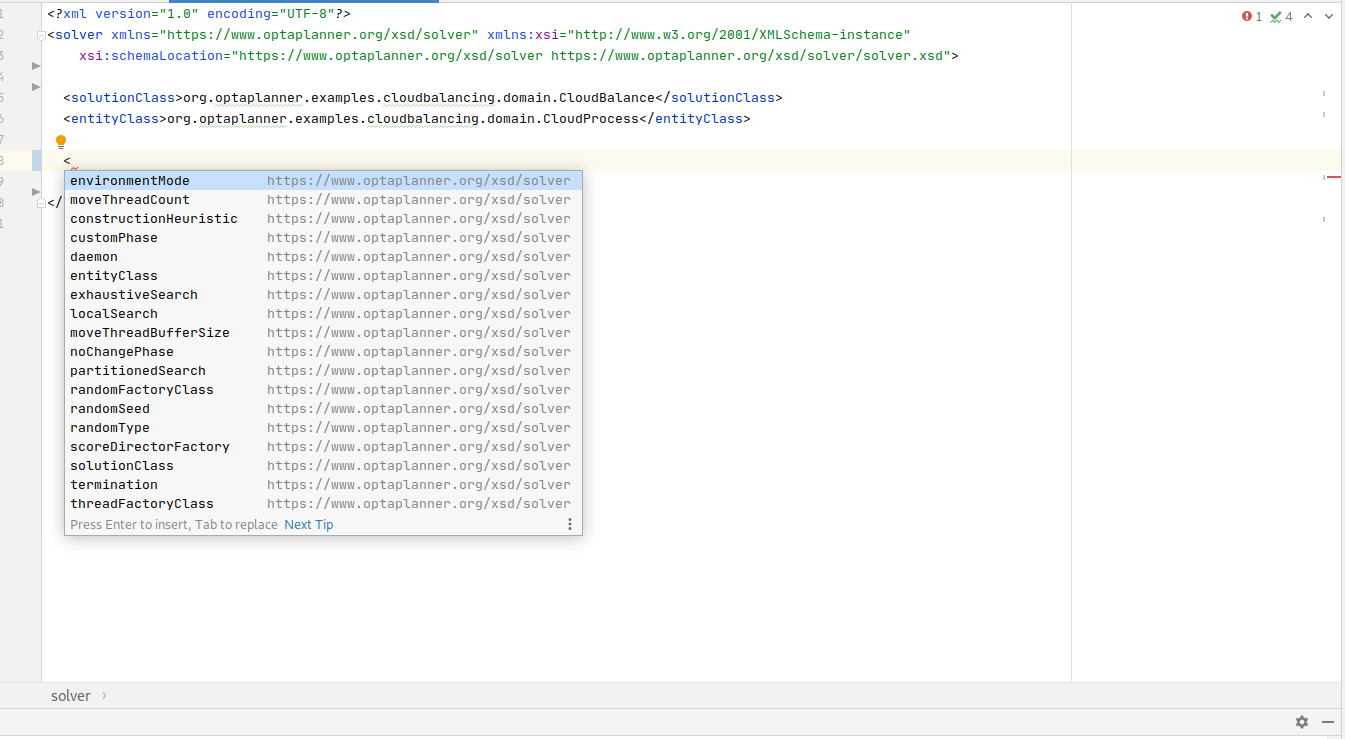

OptaPlanner supports several optimization algorithms to efficiently wade through that incredibly large number of possible solutions. Depending on the use case, some optimization algorithms perform better than others, but it’s impossible to tell in advance. With OptaPlanner, it is easy to switch the optimization algorithm, by changing the solver configuration in a few lines of XML or code.

1.3. Requirements

OptaPlanner is open source software, released under the Apache License 2.0. This license is very liberal and allows reuse for commercial purposes. Read the layman’s explanation.

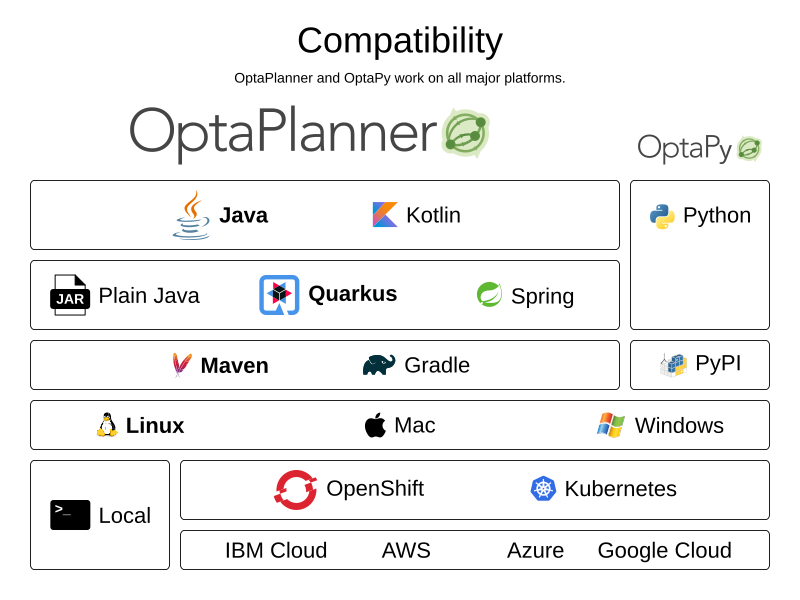

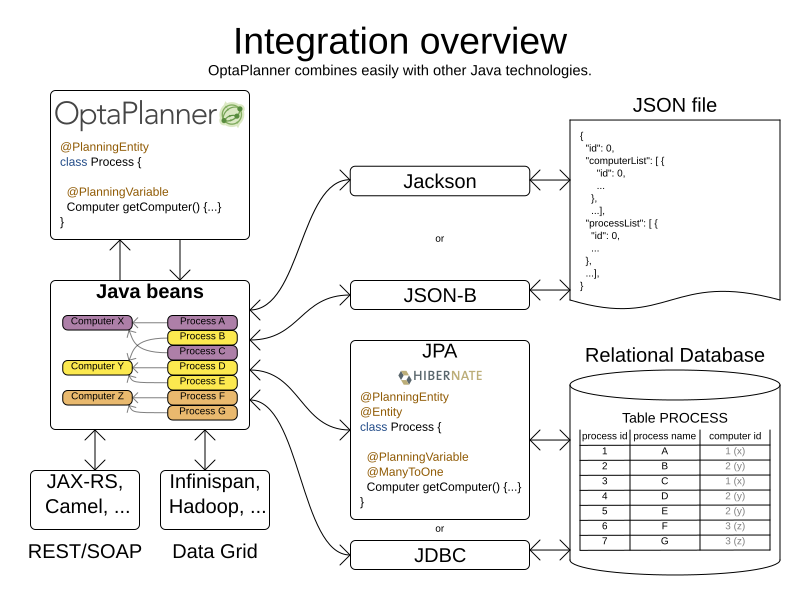

OptaPlanner is 100% pure Java™ and runs on Java 11 or higher. It integrates very easily with other Java™ technologies. OptaPlanner is available in the Maven Central Repository.

OptaPlanner works on any Java Virtual Machine and is compatible with the major JVM languages and all major platforms.

1.4. Governance

1.4.1. Status of OptaPlanner

OptaPlanner is stable, reliable and scalable. It has been heavily tested with unit, integration, and stress tests, and is used in production throughout the world. One example handles over 50 000 variables with 5000 values each, multiple constraint types and billions of possible constraint matches.

See Release notes for an overview of recent activity in the project.

1.4.2. Backwards compatibility

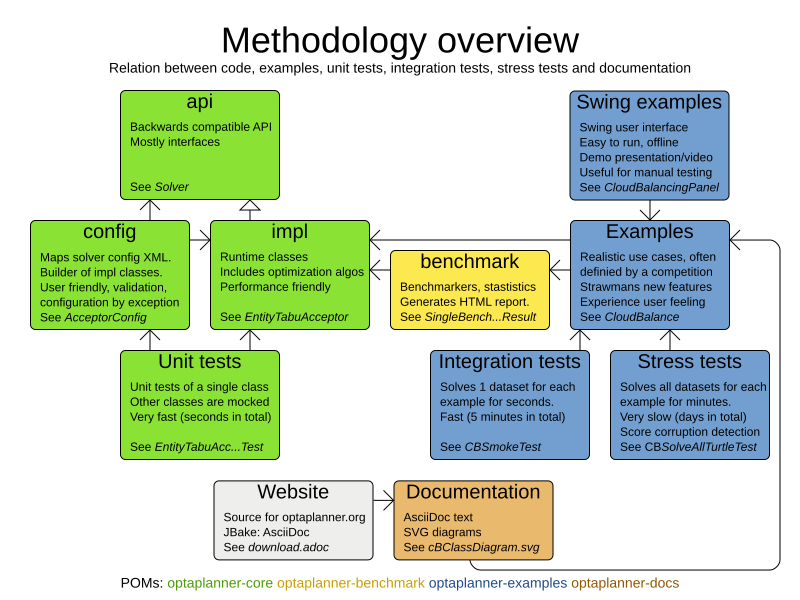

OptaPlanner separates its API and implementation:

- Public API: All classes in the package namespace org.optaplanner.core.api, org.optaplanner.benchmark.api, org.optaplanner.test.api and org.optaplanner.persistence…api are 100% backwards compatible in future releases (especially minor and hotfix releases). In rare circumstances, if the major version number changes, a few specific classes might have a few backwards incompatible changes, but those will be clearly documented in the upgrade recipe.
- XML configuration: The XML solver configuration is backwards compatible for all elements, except for elements that require the use of non-public API classes. The XML solver configuration is defined by the classes in the package namespace org.optaplanner.core.config and org.optaplanner.benchmark.config.
- Implementation classes: All other classes are not backwards compatible. They will change in future major or minor releases (but probably not in hotfix releases). The upgrade recipe describes every such relevant change and how to quickly deal with it when upgrading to a newer version.

|

This documentation covers some |

1.4.3. Community and support

For news and articles, check our blog, twitter (including Geoffrey’s twitter) and facebook.

If you’re happy with OptaPlanner, make us happy by posting a tweet or blog article about it.

Public questions are welcome on here. Bugs and feature requests are welcome in our issue tracker. Pull requests are very welcome on GitHub and get priority treatment! By open sourcing your improvements, you’ll benefit from our peer review and from our improvements made on top of your improvements.

Red Hat sponsors OptaPlanner development by employing the core team. For enterprise support and consulting, take a look at these services.

1.4.4. Relationship with KIE

OptaPlanner is part of the KIE group of projects. It is released regularly (typically every 3 weeks) together with the other KIE projects.

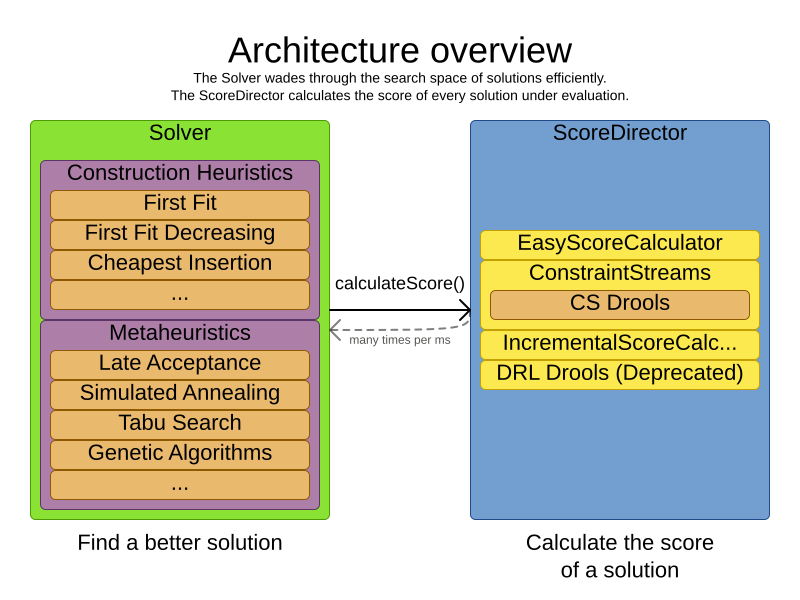

See the architecture overview to learn more about the optional integration with Drools.

1.5. Download and run the examples

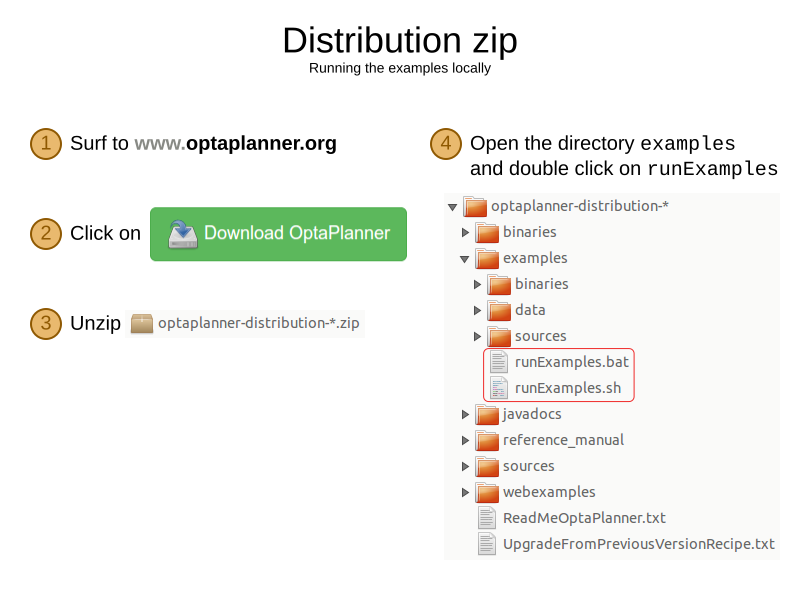

1.5.1. Get the release ZIP and run the examples

To try it now:

- Download a release zip of OptaPlanner from the OptaPlanner website and unzip it.
- Open the directory examples and run the script.

  Linux or Mac:

  ```
  $ cd examples
  $ ./runExamples.sh
  ```

  Windows:

  ```
  $ cd examples
  $ runExamples.bat
  ```

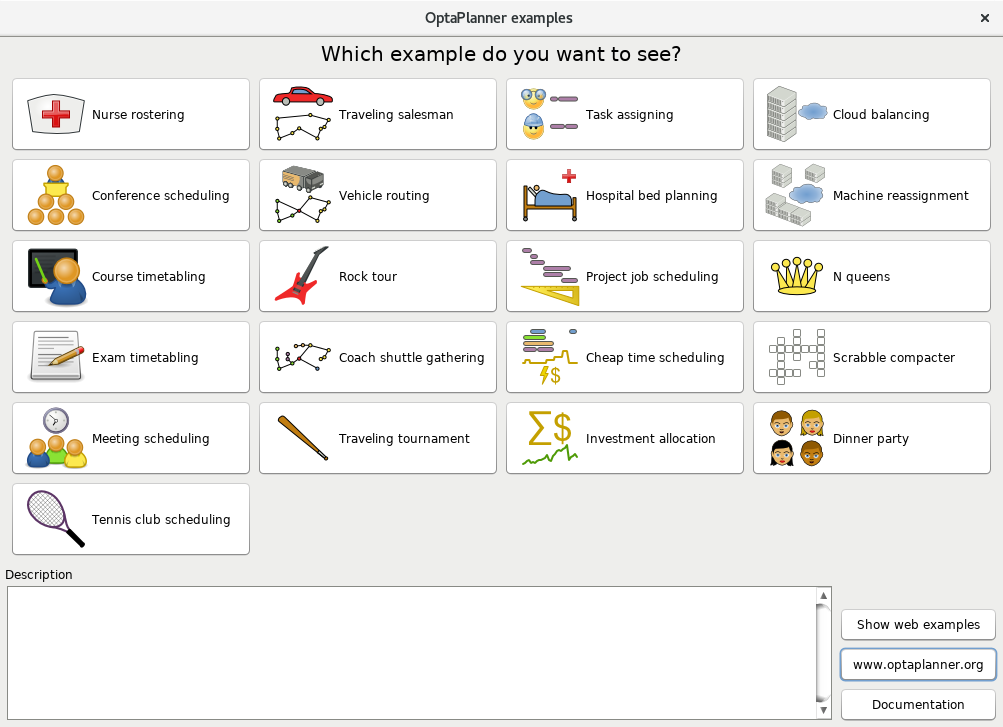

The Examples GUI application will open. Pick an example to try it out:

OptaPlanner itself has no GUI dependencies. It runs just as well on a server or a mobile JVM as it does on the desktop.

1.5.2. Run the examples in an IDE

To run the examples in IntelliJ IDEA, VSCode or Eclipse:

- Open the file examples/sources/pom.xml as a new project. The Maven integration will take care of the rest.
- Run the examples from the project.

1.5.3. Use OptaPlanner with Maven, Gradle, or ANT

The OptaPlanner jars are available in the Maven Central repository (and the snapshots in the JBoss Maven repository).

If you use Maven, add a dependency to optaplanner-core in your pom.xml:

```
<dependency>
  <groupId>org.optaplanner</groupId>
  <artifactId>optaplanner-core</artifactId>
  <version>...</version>
</dependency>
```

Or better yet, import the optaplanner-bom in dependencyManagement to avoid duplicating version numbers when adding other optaplanner dependencies later on:

```
<project>
  ...
  <dependencyManagement>
    <dependencies>
      <dependency>
        <groupId>org.optaplanner</groupId>
        <artifactId>optaplanner-bom</artifactId>
        <type>pom</type>
        <version>...</version>
        <scope>import</scope>
      </dependency>
    </dependencies>
  </dependencyManagement>
  <dependencies>
    <dependency>
      <groupId>org.optaplanner</groupId>
      <artifactId>optaplanner-core</artifactId>
    </dependency>
    <dependency>
      <groupId>org.optaplanner</groupId>
      <artifactId>optaplanner-persistence-jpa</artifactId>
    </dependency>
    ...
  </dependencies>
</project>
```

If you use Gradle, add a dependency to optaplanner-core in your build.gradle:

```
dependencies {
  implementation 'org.optaplanner:optaplanner-core:...'
}
```

If you’re still using ANT, copy all the jars from the download zip’s binaries directory into your classpath.

|

The download zip’s Check the maven repository |

1.5.4. Build OptaPlanner from source

Prerequisites

Build and run the examples from source.

- Clone optaplanner from GitHub (or alternatively, download the zipball):

  ```
  $ git clone https://github.com/kiegroup/optaplanner.git
  ...
  ```

- Build it with Maven:

  ```
  $ cd optaplanner
  $ mvn clean install -DskipTests
  ...
  ```

  The first time, Maven might take a long time, because it needs to download jars.

- Run the examples:

  ```
  $ cd optaplanner-examples
  $ mvn exec:java
  ...
  ```

- Edit the sources in your favorite IDE.

2. Quick start

2.1. Overview

Each quick start gets you up and running with OptaPlanner quickly. Pick the quick start that best aligns with your requirements:

-

-

Build a simple Java application that uses OptaPlanner to optimize a school timetable for students and teachers.

-

-

Quarkus Java (recommended)

-

Build a REST application that uses OptaPlanner to optimize a school timetable for students and teachers.

-

Quarkus is an extremely fast platform in the Java ecosystem. It is ideal for rapid incremental development, as well as deployment into the cloud. It also supports native compilation. It also offers increased performance for OptaPlanner, due to build time optimizations.

-

-

-

Build a REST application that uses OptaPlanner to optimize a school timetable for students and teachers.

-

Spring Boot is another platform in the Java ecosystem.

-

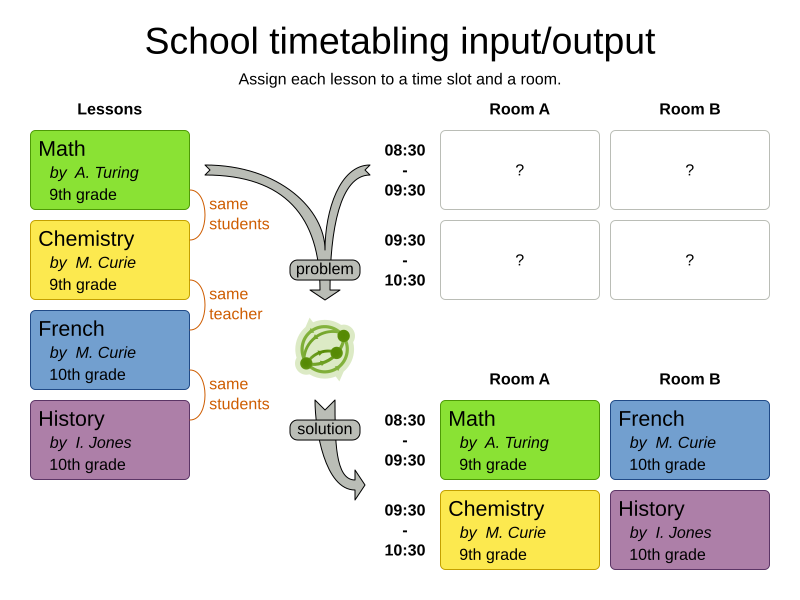

All three quick starts use OptaPlanner to optimize a school timetable for student and teachers:

For other use cases, take a look at the optaplanner-quickstarts repository and the use cases chapter.

2.2. Hello world Java quick start

This guide walks you through the process of creating a simple Java application with OptaPlanner's constraint solving Artificial Intelligence (AI).

2.2.1. What you will build

You will build a command-line application that optimizes a school timetable for students and teachers:

```
...
INFO  Solving ended: time spent (5000), best score (0hard/9soft), ...
INFO
INFO  |            | Room A     | Room B     | Room C     |
INFO  |------------|------------|------------|------------|
INFO  | MON 08:30  | English    | Math       |            |
INFO  |            | I. Jones   | A. Turing  |            |
INFO  |            | 9th grade  | 10th grade |            |
INFO  |------------|------------|------------|------------|
INFO  | MON 09:30  | History    | Physics    |            |
INFO  |            | I. Jones   | M. Curie   |            |
INFO  |            | 9th grade  | 10th grade |            |
INFO  |------------|------------|------------|------------|
INFO  | MON 10:30  | History    | Physics    |            |
INFO  |            | I. Jones   | M. Curie   |            |
INFO  |            | 10th grade | 9th grade  |            |
INFO  |------------|------------|------------|------------|
...
INFO  |------------|------------|------------|------------|
```

Your application will assign Lesson instances to Timeslot and Room instances automatically by using AI to adhere to hard and soft scheduling constraints, for example:

- A room can have at most one lesson at the same time.
- A teacher can teach at most one lesson at the same time.
- A student can attend at most one lesson at the same time.
- A teacher prefers to teach all lessons in the same room.
- A teacher prefers to teach sequential lessons and dislikes gaps between lessons.
- A student dislikes sequential lessons on the same subject.

Mathematically speaking, school timetabling is an NP-hard problem. This means it is difficult to scale. Simply brute force iterating through all possible combinations takes millions of years for a non-trivial dataset, even on a supercomputer. Fortunately, AI constraint solvers such as OptaPlanner have advanced algorithms that deliver a near-optimal solution in a reasonable amount of time.

2.2.2. Solution source code

Follow the instructions in the next sections to create the application step by step (recommended).

Alternatively, review the completed example:

- Complete one of the following tasks:
  - Clone the Git repository:

    ```
    $ git clone https://github.com/kiegroup/optaplanner-quickstarts
    ```

  - Download an archive.
- Find the solution in the hello-world directory.
- Follow the instructions in the README file to run the application.

2.2.3. Prerequisites

To complete this guide, you need:

- JDK 11+ with JAVA_HOME configured appropriately
- Apache Maven 3.8.1+ or Gradle 4+
- An IDE, such as IntelliJ IDEA, VSCode or Eclipse

2.2.4. The build file and the dependencies

Create a Maven or Gradle build file and add these dependencies:

- optaplanner-core (compile scope) to solve the school timetable problem.
- optaplanner-test (test scope) to JUnit test the school timetabling constraints.
- A logging implementation, such as logback-classic (runtime scope), to see what OptaPlanner is doing.

If you choose Maven, your pom.xml file has the following content:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.acme</groupId>

<artifactId>optaplanner-hello-world-school-timetabling-quickstart</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.release>11</maven.compiler.release>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.optaplanner</groupId>

<artifactId>optaplanner-bom</artifactId>

<version>9.44.0.Final</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

<version>1.2.3</version>

</dependency>

</dependencies>

</dependencyManagement>

<dependencies>

<dependency>

<groupId>org.optaplanner</groupId>

<artifactId>optaplanner-core</artifactId>

</dependency>

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

<scope>runtime</scope>

</dependency>

<!-- Testing -->

<dependency>

<groupId>org.optaplanner</groupId>

<artifactId>optaplanner-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>exec-maven-plugin</artifactId>

<version>3.0.0</version>

<configuration>

<mainClass>org.acme.schooltimetabling.TimeTableApp</mainClass>

</configuration>

</plugin>

</plugins>

</build>

</project>

On the other hand, in Gradle, your build.gradle file has this content:

plugins {

id "java"

id "application"

}

def optaplannerVersion = "9.44.0.Final"

def logbackVersion = "1.2.9"

group = "org.acme"

version = "1.0-SNAPSHOT"

repositories {

mavenCentral()

}

dependencies {

implementation platform("org.optaplanner:optaplanner-bom:${optaplannerVersion}")

implementation "org.optaplanner:optaplanner-core"

testImplementation "org.optaplanner:optaplanner-test"

runtimeOnly "ch.qos.logback:logback-classic:${logbackVersion}"

}

java {

sourceCompatibility = JavaVersion.VERSION_11

targetCompatibility = JavaVersion.VERSION_11

}

compileJava {

options.encoding = "UTF-8"

options.compilerArgs << "-parameters"

}

compileTestJava {

options.encoding = "UTF-8"

}

application {

mainClass = "org.acme.schooltimetabling.TimeTableApp"

}

test {

// Log the test execution results.

testLogging {

events "passed", "skipped", "failed"

}

}

2.2.5. Model the domain objects

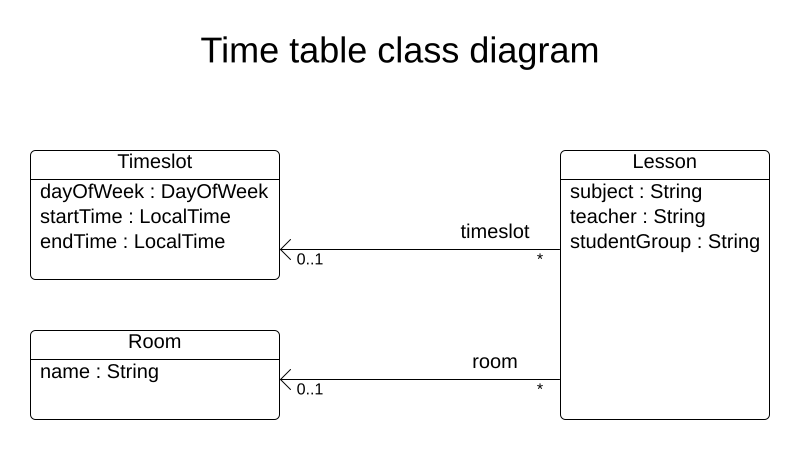

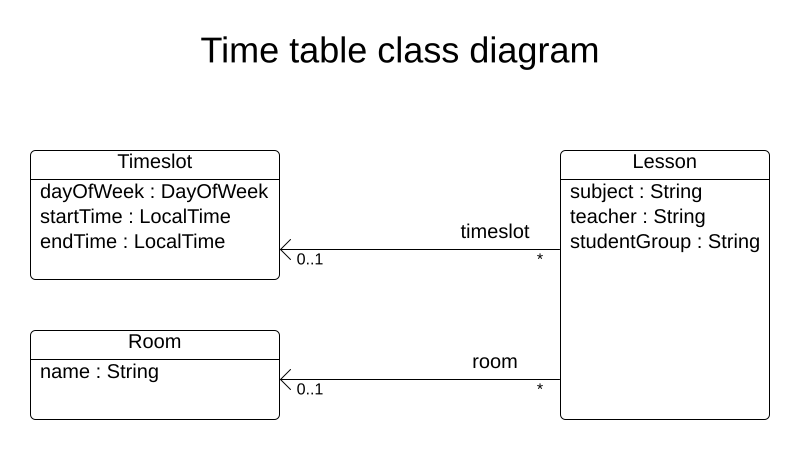

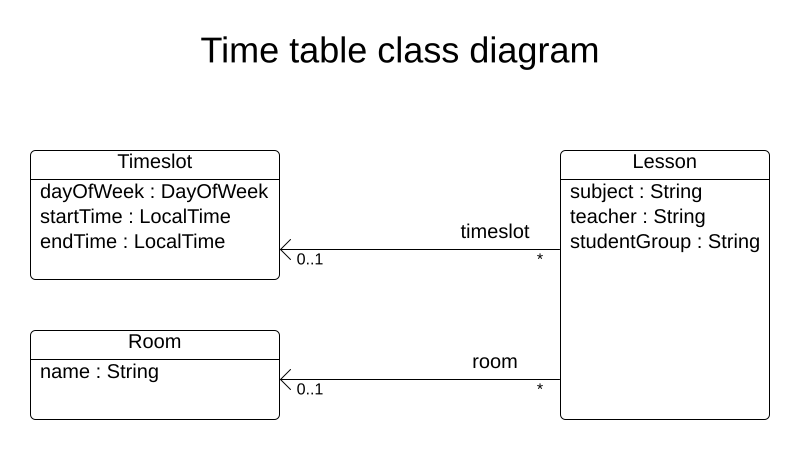

Your goal is to assign each lesson to a time slot and a room. You will create these classes:

2.2.5.1. Timeslot

The Timeslot class represents a time interval when lessons are taught, for example, Monday 10:30 - 11:30 or Tuesday 13:30 - 14:30. For simplicity’s sake, all time slots have the same duration and there are no time slots during lunch or other breaks.

A time slot has no date, because a high school schedule just repeats every week. So there is no need for continuous planning.

Create the src/main/java/org/acme/schooltimetabling/domain/Timeslot.java class:

package org.acme.schooltimetabling.domain;

import java.time.DayOfWeek;

import java.time.LocalTime;

public class Timeslot {

private DayOfWeek dayOfWeek;

private LocalTime startTime;

private LocalTime endTime;

public Timeslot() {

}

public Timeslot(DayOfWeek dayOfWeek, LocalTime startTime, LocalTime endTime) {

this.dayOfWeek = dayOfWeek;

this.startTime = startTime;

this.endTime = endTime;

}

public DayOfWeek getDayOfWeek() {

return dayOfWeek;

}

public LocalTime getStartTime() {

return startTime;

}

public LocalTime getEndTime() {

return endTime;

}

@Override

public String toString() {

return dayOfWeek + " " + startTime;

}

}

Because no Timeslot instances change during solving, a Timeslot is called a problem fact. Such classes do not require any OptaPlanner specific annotations.

Notice the toString() method keeps the output short, so it is easier to read OptaPlanner’s DEBUG or TRACE log, as shown later.

2.2.5.2. Room

The Room class represents a location where lessons are taught, for example, Room A or Room B. For simplicity’s sake, all rooms are without capacity limits and they can accommodate all lessons.

Create the src/main/java/org/acme/schooltimetabling/domain/Room.java class:

package org.acme.schooltimetabling.domain;

public class Room {

private String name;

public Room() {

}

public Room(String name) {

this.name = name;

}

public String getName() {

return name;

}

@Override

public String toString() {

return name;

}

}

Room instances do not change during solving, so Room is also a problem fact.

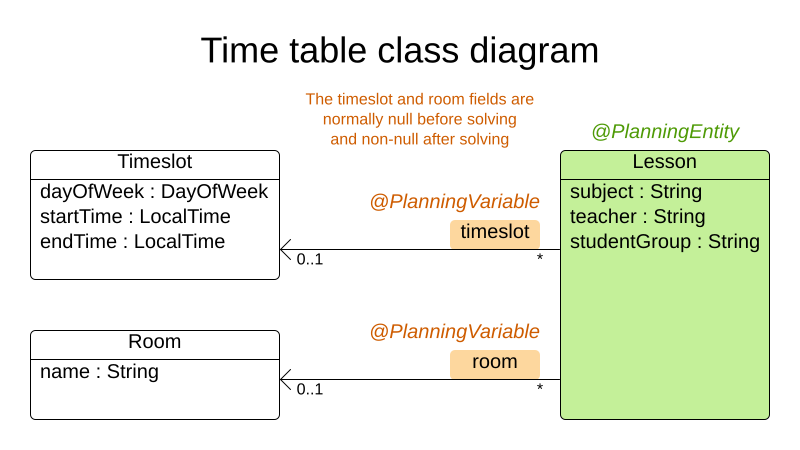

2.2.5.3. Lesson

During a lesson, represented by the Lesson class, a teacher teaches a subject to a group of students, for example, Math by A.Turing for 9th grade or Chemistry by M.Curie for 10th grade. If a subject is taught multiple times per week by the same teacher to the same student group, there are multiple Lesson instances that are only distinguishable by id. For example, the 9th grade has six math lessons a week.

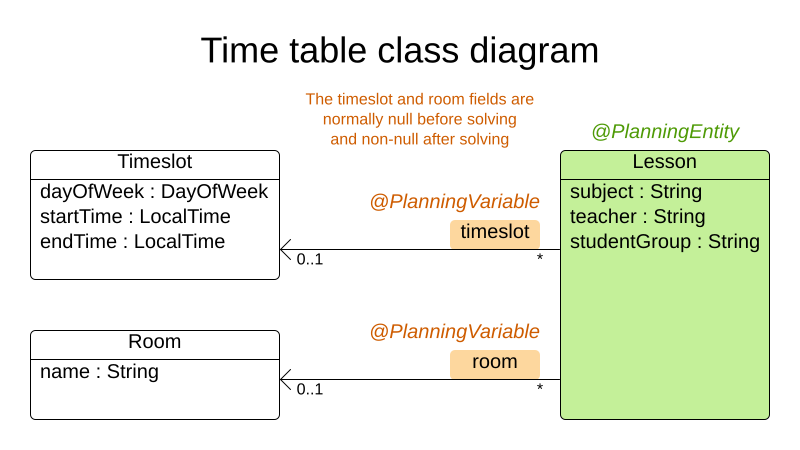

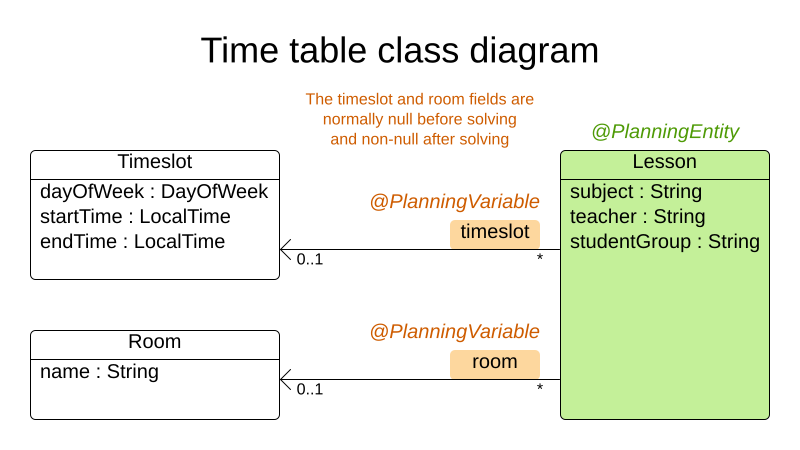

During solving, OptaPlanner changes the timeslot and room fields of the Lesson class, to assign each lesson to a time slot and a room. Because OptaPlanner changes these fields, Lesson is a planning entity.

Most of the fields in the previous diagram contain input data, except for the orange fields: A lesson’s timeslot and room fields are unassigned (null) in the input data and assigned (not null) in the output data. OptaPlanner changes these fields during solving. Such fields are called planning variables. In order for OptaPlanner to recognize them, both the timeslot and room fields require an @PlanningVariable annotation. Their containing class, Lesson, requires an @PlanningEntity annotation.

Create the src/main/java/org/acme/schooltimetabling/domain/Lesson.java class:

package org.acme.schooltimetabling.domain;

import org.optaplanner.core.api.domain.entity.PlanningEntity;

import org.optaplanner.core.api.domain.lookup.PlanningId;

import org.optaplanner.core.api.domain.variable.PlanningVariable;

@PlanningEntity

public class Lesson {

@PlanningId

private Long id;

private String subject;

private String teacher;

private String studentGroup;

@PlanningVariable

private Timeslot timeslot;

@PlanningVariable

private Room room;

public Lesson() {

}

public Lesson(Long id, String subject, String teacher, String studentGroup) {

this.id = id;

this.subject = subject;

this.teacher = teacher;

this.studentGroup = studentGroup;

}

public Long getId() {

return id;

}

public String getSubject() {

return subject;

}

public String getTeacher() {

return teacher;

}

public String getStudentGroup() {

return studentGroup;

}

public Timeslot getTimeslot() {

return timeslot;

}

public void setTimeslot(Timeslot timeslot) {

this.timeslot = timeslot;

}

public Room getRoom() {

return room;

}

public void setRoom(Room room) {

this.room = room;

}

@Override

public String toString() {

return subject + "(" + id + ")";

}

}

The Lesson class has an @PlanningEntity annotation, so OptaPlanner knows that this class changes during solving because it contains one or more planning variables.

The timeslot field has an @PlanningVariable annotation, so OptaPlanner knows that it can change its value. In order to find potential Timeslot instances to assign to this field, OptaPlanner uses the variable type to connect to a value range provider that provides a List<Timeslot> to pick from.

The room field also has an @PlanningVariable annotation, for the same reasons.

|

Determining the |

2.2.6. Define the constraints and calculate the score

A score represents the quality of a specific solution. The higher the better. OptaPlanner looks for the best solution, which is the solution with the highest score found in the available time. It might be the optimal solution.

Because this use case has hard and soft constraints, use the HardSoftScore class to represent the score:

- Hard constraints must not be broken. For example: A room can have at most one lesson at the same time.
- Soft constraints should not be broken. For example: A teacher prefers to teach in a single room.

Hard constraints are weighted against other hard constraints. Soft constraints are weighted too, against other soft constraints. Hard constraints always outweigh soft constraints, regardless of their respective weights.
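The rule that hard constraints always outweigh soft constraints amounts to comparing the hard level first and looking at the soft level only on a tie. The sketch below shows that ordering in plain Java. The class `SimpleHardSoftScore` is a hypothetical stand-in written for this illustration; it is not OptaPlanner's HardSoftScore class and makes no claim about how that class is implemented.

```java
// Illustrative only: a two-level score that compares the hard level first.
final class SimpleHardSoftScore implements Comparable<SimpleHardSoftScore> {

    final int hard;
    final int soft;

    SimpleHardSoftScore(int hard, int soft) {
        this.hard = hard;
        this.soft = soft;
    }

    @Override
    public int compareTo(SimpleHardSoftScore other) {
        // The soft level only matters when the hard levels are equal.
        if (hard != other.hard) {
            return Integer.compare(hard, other.hard);
        }
        return Integer.compare(soft, other.soft);
    }

    @Override
    public String toString() {
        return hard + "hard/" + soft + "soft";
    }

    public static void main(String[] args) {
        SimpleHardSoftScore manySoftBroken = new SimpleHardSoftScore(0, -1000);
        SimpleHardSoftScore oneHardBroken = new SimpleHardSoftScore(-1, 0);
        // A positive result means the first score is the better one.
        System.out.println(manySoftBroken + " vs " + oneHardBroken + ": "
                + manySoftBroken.compareTo(oneHardBroken));
    }
}
```

With this ordering, a solution that breaks a thousand soft constraints still beats a solution that breaks a single hard constraint.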

To calculate the score, you could implement an EasyScoreCalculator class:

public class TimeTableEasyScoreCalculator implements EasyScoreCalculator<TimeTable, HardSoftScore> {

@Override

public HardSoftScore calculateScore(TimeTable timeTable) {

List<Lesson> lessonList = timeTable.getLessonList();

int hardScore = 0;

for (Lesson a : lessonList) {

for (Lesson b : lessonList) {

if (a.getTimeslot() != null && a.getTimeslot().equals(b.getTimeslot())

&& a.getId() < b.getId()) {

// A room can accommodate at most one lesson at the same time.

if (a.getRoom() != null && a.getRoom().equals(b.getRoom())) {

hardScore--;

}

// A teacher can teach at most one lesson at the same time.

if (a.getTeacher().equals(b.getTeacher())) {

hardScore--;

}

// A student can attend at most one lesson at the same time.

if (a.getStudentGroup().equals(b.getStudentGroup())) {

hardScore--;

}

}

}

}

int softScore = 0;

// Soft constraints are only implemented in the optaplanner-quickstarts code

return HardSoftScore.of(hardScore, softScore);

}

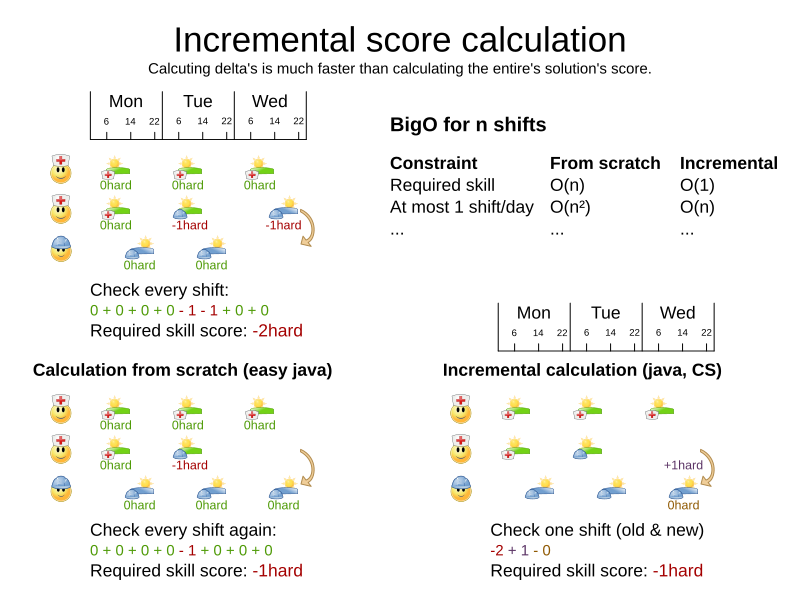

}Unfortunately that does not scale well, because it is non-incremental: every time a lesson is assigned to a different time slot or room, all lessons are re-evaluated to calculate the new score.

Instead, create a src/main/java/org/acme/schooltimetabling/solver/TimeTableConstraintProvider.java class to perform incremental score calculation. It uses OptaPlanner’s ConstraintStream API which is inspired by Java Streams and SQL:

package org.acme.schooltimetabling.solver;

import org.acme.schooltimetabling.domain.Lesson;

import org.optaplanner.core.api.score.buildin.hardsoft.HardSoftScore;

import org.optaplanner.core.api.score.stream.Constraint;

import org.optaplanner.core.api.score.stream.ConstraintFactory;

import org.optaplanner.core.api.score.stream.ConstraintProvider;

import org.optaplanner.core.api.score.stream.Joiners;

public class TimeTableConstraintProvider implements ConstraintProvider {

@Override

public Constraint[] defineConstraints(ConstraintFactory constraintFactory) {

return new Constraint[] {

// Hard constraints

roomConflict(constraintFactory),

teacherConflict(constraintFactory),

studentGroupConflict(constraintFactory),

// Soft constraints are only implemented in the optaplanner-quickstarts code

};

}

private Constraint roomConflict(ConstraintFactory constraintFactory) {

// A room can accommodate at most one lesson at the same time.

// Select a lesson ...

return constraintFactory

.forEach(Lesson.class)

// ... and pair it with another lesson ...

.join(Lesson.class,

// ... in the same timeslot ...

Joiners.equal(Lesson::getTimeslot),

// ... in the same room ...

Joiners.equal(Lesson::getRoom),

// ... and the pair is unique (different id, no reverse pairs) ...

Joiners.lessThan(Lesson::getId))

// ... then penalize each pair with a hard weight.

.penalize(HardSoftScore.ONE_HARD)

.asConstraint("Room conflict");

}

private Constraint teacherConflict(ConstraintFactory constraintFactory) {

// A teacher can teach at most one lesson at the same time.

return constraintFactory.forEach(Lesson.class)

.join(Lesson.class,

Joiners.equal(Lesson::getTimeslot),

Joiners.equal(Lesson::getTeacher),

Joiners.lessThan(Lesson::getId))

.penalize(HardSoftScore.ONE_HARD)

.asConstraint("Teacher conflict");

}

private Constraint studentGroupConflict(ConstraintFactory constraintFactory) {

// A student can attend at most one lesson at the same time.

return constraintFactory.forEach(Lesson.class)

.join(Lesson.class,

Joiners.equal(Lesson::getTimeslot),

Joiners.equal(Lesson::getStudentGroup),

Joiners.lessThan(Lesson::getId))

.penalize(HardSoftScore.ONE_HARD)

.asConstraint("Student group conflict");

}

}

The ConstraintProvider scales an order of magnitude better than the EasyScoreCalculator: O(n) instead of O(n²).
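To illustrate what incremental means, the following plain Java sketch keeps a running count of room conflicts and adjusts it when a single lesson is assigned or unassigned, instead of comparing all lesson pairs again. It is a conceptual illustration only: the class `IncrementalRoomConflictCounter` and its methods are hypothetical, and this is not how OptaPlanner's ConstraintStream implementation works internally.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative only: maintains the room conflict count with a delta per change.
final class IncrementalRoomConflictCounter {

    // Number of lessons currently assigned to each (timeslot, room) combination.
    private final Map<String, Integer> lessonsPerSlotAndRoom = new HashMap<>();
    private int conflictingPairs = 0;

    void assign(String timeslot, String room) {
        String key = timeslot + "|" + room;
        int before = lessonsPerSlotAndRoom.getOrDefault(key, 0);
        // The new lesson conflicts with every lesson already in that slot and room.
        conflictingPairs += before;
        lessonsPerSlotAndRoom.put(key, before + 1);
    }

    void unassign(String timeslot, String room) {
        String key = timeslot + "|" + room;
        int before = lessonsPerSlotAndRoom.getOrDefault(key, 0);
        if (before == 0) {
            throw new IllegalStateException("No lesson assigned to " + key);
        }
        // The removed lesson no longer conflicts with the lessons that remain.
        conflictingPairs -= before - 1;
        lessonsPerSlotAndRoom.put(key, before - 1);
    }

    /** One hard penalty point per conflicting pair of lessons. */
    int hardScore() {
        return -conflictingPairs;
    }

    public static void main(String[] args) {
        IncrementalRoomConflictCounter counter = new IncrementalRoomConflictCounter();
        counter.assign("MON 08:30", "Room A");
        counter.assign("MON 08:30", "Room A");
        counter.assign("MON 08:30", "Room B");
        System.out.println("Hard score: " + counter.hardScore());
    }
}
```

Each change touches a single map entry, so the cost of updating the score does not depend on the total number of lessons.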

2.2.7. Gather the domain objects in a planning solution

A TimeTable wraps all Timeslot, Room, and Lesson instances of a single dataset. Furthermore, because it contains all lessons, each with a specific planning variable state, it is a planning solution and it has a score:

- If lessons are still unassigned, then it is an uninitialized solution, for example, a solution with the score -4init/0hard/0soft.
- If it breaks hard constraints, then it is an infeasible solution, for example, a solution with the score -2hard/-3soft.
- If it adheres to all hard constraints, then it is a feasible solution, for example, a solution with the score 0hard/-7soft.
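The three cases above can be written down as a small decision rule. The sketch below is plain Java with hypothetical names (`SolutionStatus`, `classify`); it assumes, as the example scores suggest, that a negative init level means some variables are still unassigned and a negative hard level means at least one hard constraint is broken.

```java
// Illustrative only: classifies a solution from its init and hard score levels.
final class SolutionStatus {

    enum Status { UNINITIALIZED, INFEASIBLE, FEASIBLE }

    static Status classify(int initScore, int hardScore) {
        if (initScore < 0) {
            return Status.UNINITIALIZED; // some planning variables are still null
        }
        if (hardScore < 0) {
            return Status.INFEASIBLE; // at least one hard constraint is broken
        }
        return Status.FEASIBLE; // only soft constraints may be broken
    }

    public static void main(String[] args) {
        System.out.println(classify(-4, 0)); // the -4init/0hard/0soft example
        System.out.println(classify(0, -2)); // the -2hard/-3soft example
        System.out.println(classify(0, 0));  // the 0hard/-7soft example
    }
}
```

The soft level plays no role in this classification; it only ranks solutions that have the same hard level.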

Create the src/main/java/org/acme/schooltimetabling/domain/TimeTable.java class:

package org.acme.schooltimetabling.domain;

import java.util.List;

import org.optaplanner.core.api.domain.solution.PlanningEntityCollectionProperty;

import org.optaplanner.core.api.domain.solution.PlanningScore;

import org.optaplanner.core.api.domain.solution.PlanningSolution;

import org.optaplanner.core.api.domain.solution.ProblemFactCollectionProperty;

import org.optaplanner.core.api.domain.valuerange.ValueRangeProvider;

import org.optaplanner.core.api.score.buildin.hardsoft.HardSoftScore;

@PlanningSolution

public class TimeTable {

@ValueRangeProvider

@ProblemFactCollectionProperty

private List<Timeslot> timeslotList;

@ValueRangeProvider

@ProblemFactCollectionProperty

private List<Room> roomList;

@PlanningEntityCollectionProperty

private List<Lesson> lessonList;

@PlanningScore

private HardSoftScore score;

public TimeTable() {

}

public TimeTable(List<Timeslot> timeslotList, List<Room> roomList, List<Lesson> lessonList) {

this.timeslotList = timeslotList;

this.roomList = roomList;

this.lessonList = lessonList;

}

public List<Timeslot> getTimeslotList() {

return timeslotList;

}

public List<Room> getRoomList() {

return roomList;

}

public List<Lesson> getLessonList() {

return lessonList;

}

public HardSoftScore getScore() {

return score;

}

}

The TimeTable class has an @PlanningSolution annotation,

so OptaPlanner knows that this class contains all of the input and output data.

Specifically, this class is the input of the problem:

- A timeslotList field with all time slots

  - This is a list of problem facts, because they do not change during solving.

- A roomList field with all rooms

  - This is a list of problem facts, because they do not change during solving.

- A lessonList field with all lessons

  - This is a list of planning entities, because they change during solving.

  - Of each Lesson:

    - The values of the timeslot and room fields are typically still null, so unassigned. They are planning variables.

    - The other fields, such as subject, teacher and studentGroup, are filled in. These fields are problem properties.

However, this class is also the output of the solution:

- A lessonList field for which each Lesson instance has non-null timeslot and room fields after solving

- A score field that represents the quality of the output solution, for example, 0hard/-5soft

2.2.7.1. The value range providers

The timeslotList field is a value range provider.

It holds the Timeslot instances which OptaPlanner can pick from to assign to the timeslot field of Lesson instances.

The timeslotList field has an @ValueRangeProvider annotation to connect the @PlanningVariable with the @ValueRangeProvider,

by matching the type of the planning variable with the type returned by the value range provider.

Following the same logic, the roomList field also has an @ValueRangeProvider annotation.

2.2.7.2. The problem fact and planning entity properties

Furthermore, OptaPlanner needs to know which Lesson instances it can change

as well as how to retrieve the Timeslot and Room instances used for score calculation

by your TimeTableConstraintProvider.

The timeslotList and roomList fields have an @ProblemFactCollectionProperty annotation,

so your TimeTableConstraintProvider can select from those instances.

The lessonList has an @PlanningEntityCollectionProperty annotation,

so OptaPlanner can change them during solving

and your TimeTableConstraintProvider can select from those too.

2.2.8. Create the application

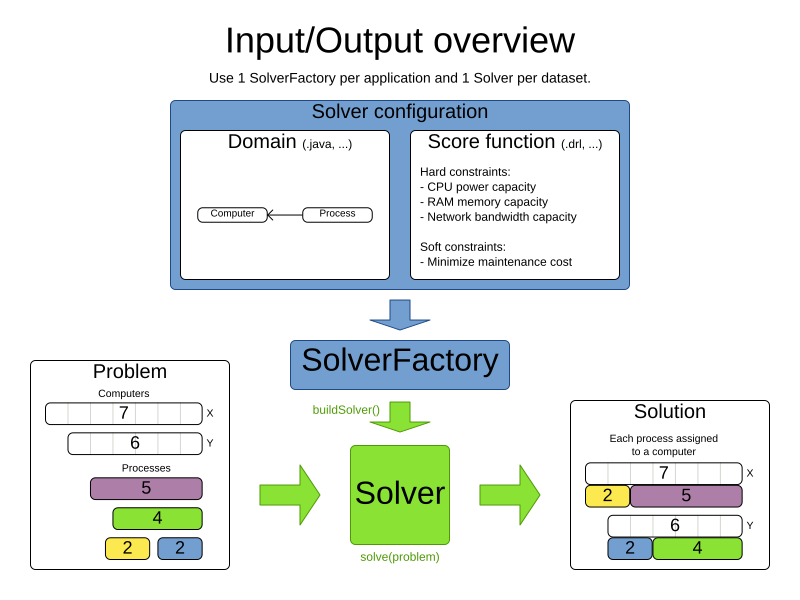

Now you are ready to put everything together and create a Java application.

The main() method performs the following tasks:

- Creates the SolverFactory to build a Solver per dataset.

- Loads a dataset.

- Solves it with Solver.solve().

- Visualizes the solution for that dataset.

Typically, an application has a single SolverFactory

to build a new Solver instance for each problem dataset to solve.

A SolverFactory is thread-safe, but a Solver is not.

In this case, there is only one dataset, so only one Solver instance.

Create the src/main/java/org/acme/schooltimetabling/TimeTableApp.java class:

package org.acme.schooltimetabling;

import java.time.DayOfWeek;

import java.time.Duration;

import java.time.LocalTime;

import java.util.ArrayList;

import java.util.Collections;

import java.util.List;

import java.util.Map;

import java.util.stream.Collectors;

import org.acme.schooltimetabling.domain.Lesson;

import org.acme.schooltimetabling.domain.Room;

import org.acme.schooltimetabling.domain.TimeTable;

import org.acme.schooltimetabling.domain.Timeslot;

import org.acme.schooltimetabling.solver.TimeTableConstraintProvider;

import org.optaplanner.core.api.solver.Solver;

import org.optaplanner.core.api.solver.SolverFactory;

import org.optaplanner.core.config.solver.SolverConfig;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class TimeTableApp {

private static final Logger LOGGER = LoggerFactory.getLogger(TimeTableApp.class);

public static void main(String[] args) {

SolverFactory<TimeTable> solverFactory = SolverFactory.create(new SolverConfig()

.withSolutionClass(TimeTable.class)

.withEntityClasses(Lesson.class)

.withConstraintProviderClass(TimeTableConstraintProvider.class)

// The solver runs only for 5 seconds on this small dataset.

// It's recommended to run for at least 5 minutes ("5m") otherwise.

.withTerminationSpentLimit(Duration.ofSeconds(5)));

// Load the problem

TimeTable problem = generateDemoData();

// Solve the problem

Solver<TimeTable> solver = solverFactory.buildSolver();

TimeTable solution = solver.solve(problem);

// Visualize the solution

printTimetable(solution);

}

public static TimeTable generateDemoData() {

List<Timeslot> timeslotList = new ArrayList<>(10);

timeslotList.add(new Timeslot(DayOfWeek.MONDAY, LocalTime.of(8, 30), LocalTime.of(9, 30)));

timeslotList.add(new Timeslot(DayOfWeek.MONDAY, LocalTime.of(9, 30), LocalTime.of(10, 30)));

timeslotList.add(new Timeslot(DayOfWeek.MONDAY, LocalTime.of(10, 30), LocalTime.of(11, 30)));

timeslotList.add(new Timeslot(DayOfWeek.MONDAY, LocalTime.of(13, 30), LocalTime.of(14, 30)));

timeslotList.add(new Timeslot(DayOfWeek.MONDAY, LocalTime.of(14, 30), LocalTime.of(15, 30)));

timeslotList.add(new Timeslot(DayOfWeek.TUESDAY, LocalTime.of(8, 30), LocalTime.of(9, 30)));

timeslotList.add(new Timeslot(DayOfWeek.TUESDAY, LocalTime.of(9, 30), LocalTime.of(10, 30)));

timeslotList.add(new Timeslot(DayOfWeek.TUESDAY, LocalTime.of(10, 30), LocalTime.of(11, 30)));

timeslotList.add(new Timeslot(DayOfWeek.TUESDAY, LocalTime.of(13, 30), LocalTime.of(14, 30)));

timeslotList.add(new Timeslot(DayOfWeek.TUESDAY, LocalTime.of(14, 30), LocalTime.of(15, 30)));

List<Room> roomList = new ArrayList<>(3);

roomList.add(new Room("Room A"));

roomList.add(new Room("Room B"));

roomList.add(new Room("Room C"));

List<Lesson> lessonList = new ArrayList<>();

long id = 0;

lessonList.add(new Lesson(id++, "Math", "A. Turing", "9th grade"));

lessonList.add(new Lesson(id++, "Math", "A. Turing", "9th grade"));

lessonList.add(new Lesson(id++, "Physics", "M. Curie", "9th grade"));

lessonList.add(new Lesson(id++, "Chemistry", "M. Curie", "9th grade"));

lessonList.add(new Lesson(id++, "Biology", "C. Darwin", "9th grade"));

lessonList.add(new Lesson(id++, "History", "I. Jones", "9th grade"));

lessonList.add(new Lesson(id++, "English", "I. Jones", "9th grade"));

lessonList.add(new Lesson(id++, "English", "I. Jones", "9th grade"));

lessonList.add(new Lesson(id++, "Spanish", "P. Cruz", "9th grade"));

lessonList.add(new Lesson(id++, "Spanish", "P. Cruz", "9th grade"));

lessonList.add(new Lesson(id++, "Math", "A. Turing", "10th grade"));

lessonList.add(new Lesson(id++, "Math", "A. Turing", "10th grade"));

lessonList.add(new Lesson(id++, "Math", "A. Turing", "10th grade"));

lessonList.add(new Lesson(id++, "Physics", "M. Curie", "10th grade"));

lessonList.add(new Lesson(id++, "Chemistry", "M. Curie", "10th grade"));

lessonList.add(new Lesson(id++, "French", "M. Curie", "10th grade"));

lessonList.add(new Lesson(id++, "Geography", "C. Darwin", "10th grade"));

lessonList.add(new Lesson(id++, "History", "I. Jones", "10th grade"));

lessonList.add(new Lesson(id++, "English", "P. Cruz", "10th grade"));

lessonList.add(new Lesson(id++, "Spanish", "P. Cruz", "10th grade"));

return new TimeTable(timeslotList, roomList, lessonList);

}

private static void printTimetable(TimeTable timeTable) {

LOGGER.info("");

List<Room> roomList = timeTable.getRoomList();

List<Lesson> lessonList = timeTable.getLessonList();

Map<Timeslot, Map<Room, List<Lesson>>> lessonMap = lessonList.stream()

.filter(lesson -> lesson.getTimeslot() != null && lesson.getRoom() != null)

.collect(Collectors.groupingBy(Lesson::getTimeslot, Collectors.groupingBy(Lesson::getRoom)));

LOGGER.info("|            | " + roomList.stream()

.map(room -> String.format("%-10s", room.getName())).collect(Collectors.joining(" | ")) + " |");

LOGGER.info("|" + "------------|".repeat(roomList.size() + 1));

for (Timeslot timeslot : timeTable.getTimeslotList()) {

List<List<Lesson>> cellList = roomList.stream()

.map(room -> {

Map<Room, List<Lesson>> byRoomMap = lessonMap.get(timeslot);

if (byRoomMap == null) {

return Collections.<Lesson>emptyList();

}

List<Lesson> cellLessonList = byRoomMap.get(room);

if (cellLessonList == null) {

return Collections.<Lesson>emptyList();

}

return cellLessonList;

})

.collect(Collectors.toList());

LOGGER.info("| " + String.format("%-10s",

timeslot.getDayOfWeek().toString().substring(0, 3) + " " + timeslot.getStartTime()) + " | "

+ cellList.stream().map(cellLessonList -> String.format("%-10s",

cellLessonList.stream().map(Lesson::getSubject).collect(Collectors.joining(", "))))

.collect(Collectors.joining(" | "))

+ " |");

LOGGER.info("|            | "

+ cellList.stream().map(cellLessonList -> String.format("%-10s",

cellLessonList.stream().map(Lesson::getTeacher).collect(Collectors.joining(", "))))

.collect(Collectors.joining(" | "))

+ " |");

LOGGER.info("|            | "

+ cellList.stream().map(cellLessonList -> String.format("%-10s",

cellLessonList.stream().map(Lesson::getStudentGroup).collect(Collectors.joining(", "))))

.collect(Collectors.joining(" | "))

+ " |");

LOGGER.info("|" + "------------|".repeat(roomList.size() + 1));

}

List<Lesson> unassignedLessons = lessonList.stream()

.filter(lesson -> lesson.getTimeslot() == null || lesson.getRoom() == null)

.collect(Collectors.toList());

if (!unassignedLessons.isEmpty()) {

LOGGER.info("");

LOGGER.info("Unassigned lessons");

for (Lesson lesson : unassignedLessons) {

LOGGER.info(" " + lesson.getSubject() + " - " + lesson.getTeacher() + " - " + lesson.getStudentGroup());

}

}

}

}

The main() method first creates the SolverFactory:

SolverFactory<TimeTable> solverFactory = SolverFactory.create(new SolverConfig()

.withSolutionClass(TimeTable.class)

.withEntityClasses(Lesson.class)

.withConstraintProviderClass(TimeTableConstraintProvider.class)

// The solver runs only for 5 seconds on this small dataset.

// It's recommended to run for at least 5 minutes ("5m") otherwise.

.withTerminationSpentLimit(Duration.ofSeconds(5)));

This registers the @PlanningSolution class, the @PlanningEntity classes,

and the ConstraintProvider class, all of which you created earlier.

Without a termination setting or a terminationEarly() event, the solver runs forever.

To avoid that, the solver configuration limits the solving time to five seconds.

The main() method then loads the problem, solves it, and prints the solution:

// Load the problem

TimeTable problem = generateDemoData();

// Solve the problem

Solver<TimeTable> solver = solverFactory.buildSolver();

TimeTable solution = solver.solve(problem);

// Visualize the solution

printTimetable(solution);

The solve() method doesn’t return instantly.

It runs for five seconds before returning the best solution.

OptaPlanner returns the best solution found in the available termination time. Due to the nature of NP-hard problems, the best solution might not be optimal, especially for larger datasets. Increase the termination time to potentially find a better solution.
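The "best solution found in the available time" idea can be illustrated with a toy search. The sketch below is not how OptaPlanner's algorithms work: it uses plain random sampling, a step budget instead of a time limit, and a made-up objective. All names (`AnytimeSearchSketch`, `score`, `bestScoreAfter`) are hypothetical.

```java
import java.util.Random;

class AnytimeSearchSketch {

    /** Hypothetical toy objective: higher is better, with the optimum 0 at x = 42. */
    static int score(int x) {
        return -Math.abs(x - 42);
    }

    /** Tries the given number of random candidates and returns the best score seen. */
    static int bestScoreAfter(int steps, long seed) {
        Random random = new Random(seed);
        int best = Integer.MIN_VALUE;
        for (int i = 0; i < steps; i++) {
            int candidate = random.nextInt(1_000_000);
            best = Math.max(best, score(candidate));
        }
        return best;
    }

    public static void main(String[] args) {
        // Same seed, so the longer run sees every candidate of the shorter run and more.
        System.out.println("10 steps:     " + bestScoreAfter(10, 0L));
        System.out.println("10000 steps:  " + bestScoreAfter(10_000, 0L));
    }
}
```

With the same seed, a larger budget can only keep or improve the best score, but nothing guarantees that it reaches the optimum. That mirrors the advice above: a longer termination time may yield a better solution, not necessarily the optimal one.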

The generateDemoData() method generates the school timetable problem to solve.

The printTimetable() method pretty prints the timetable to the console,

so it’s easy to determine visually whether or not it’s a good schedule.

2.2.8.1. Configure logging

To see any output in the console, logging must be configured properly.

Create the src/main/resources/logback.xml file:

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<appender name="consoleAppender" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<pattern>%d{HH:mm:ss.SSS} [%-12.12t] %-5p %m%n</pattern>

</encoder>

</appender>

<logger name="org.optaplanner" level="info"/>

<root level="info">

<appender-ref ref="consoleAppender" />

</root>

</configuration>

2.2.9. Run the application

2.2.9.1. Run the application in IDE

Run that TimeTableApp class as the main class of a normal Java application:

...
INFO  |            | Room A     | Room B     | Room C     |
INFO  |------------|------------|------------|------------|
INFO  | MON 08:30  | English    | Math       |            |
INFO  |            | I. Jones   | A. Turing  |            |
INFO  |            | 9th grade  | 10th grade |            |
INFO  |------------|------------|------------|------------|
INFO  | MON 09:30  | History    | Physics    |            |
INFO  |            | I. Jones   | M. Curie   |            |
INFO  |            | 9th grade  | 10th grade |            |
...

Verify the console output. Does it conform to all hard constraints?

What happens if you comment out the roomConflict constraint in TimeTableConstraintProvider?

The info log shows what OptaPlanner did in those five seconds:

... Solving started: time spent (33), best score (-8init/0hard/0soft), environment mode (REPRODUCIBLE), random (JDK with seed 0).

... Construction Heuristic phase (0) ended: time spent (73), best score (0hard/0soft), score calculation speed (459/sec), step total (4).

... Local Search phase (1) ended: time spent (5000), best score (0hard/0soft), score calculation speed (28949/sec), step total (28398).

... Solving ended: time spent (5000), best score (0hard/0soft), score calculation speed (28524/sec), phase total (2), environment mode (REPRODUCIBLE).

2.2.9.2. Test the application

A good application includes test coverage.

2.2.9.2.1. Test the constraints

To test each constraint in isolation, use a ConstraintVerifier in unit tests.

This tests each constraint’s corner cases in isolation from the other tests,

which lowers maintenance when adding a new constraint with proper test coverage.

Create the src/test/java/org/acme/schooltimetabling/solver/TimeTableConstraintProviderTest.java class:

package org.acme.schooltimetabling.solver;

import java.time.DayOfWeek;

import java.time.LocalTime;

import org.acme.schooltimetabling.domain.Lesson;

import org.acme.schooltimetabling.domain.Room;

import org.acme.schooltimetabling.domain.TimeTable;

import org.acme.schooltimetabling.domain.Timeslot;

import org.junit.jupiter.api.Test;

import org.optaplanner.test.api.score.stream.ConstraintVerifier;

class TimeTableConstraintProviderTest {

private static final Room ROOM1 = new Room("Room1");

private static final Timeslot TIMESLOT1 = new Timeslot(DayOfWeek.MONDAY, LocalTime.NOON);

private static final Timeslot TIMESLOT2 = new Timeslot(DayOfWeek.TUESDAY, LocalTime.NOON);

ConstraintVerifier<TimeTableConstraintProvider, TimeTable> constraintVerifier = ConstraintVerifier.build(

new TimeTableConstraintProvider(), TimeTable.class, Lesson.class);

@Test

void roomConflict() {

Lesson firstLesson = new Lesson(1, "Subject1", "Teacher1", "Group1", TIMESLOT1, ROOM1);

Lesson conflictingLesson = new Lesson(2, "Subject2", "Teacher2", "Group2", TIMESLOT1, ROOM1);

Lesson nonConflictingLesson = new Lesson(3, "Subject3", "Teacher3", "Group3", TIMESLOT2, ROOM1);

constraintVerifier.verifyThat(TimeTableConstraintProvider::roomConflict)

.given(firstLesson, conflictingLesson, nonConflictingLesson)

.penalizesBy(1);

}

}

This test verifies that the constraint TimeTableConstraintProvider::roomConflict penalizes with a match weight of 1

when given three lessons in the same room, where two lessons have the same timeslot.

Therefore, a constraint weight of 10hard would change the score by -10hard.

Notice how ConstraintVerifier ignores the constraint weight during testing, even

if those constraint weights are hard coded in the ConstraintProvider, because

constraint weights change regularly before going into production.

This way, constraint weight tweaking does not break the unit tests.

For more, see Testing Constraint Streams.
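The relation between the match weight that the test checks and the constraint weight it ignores can be written out as simple arithmetic. This is a minimal sketch with hypothetical names (`ConstraintImpactSketch`, `penaltyImpact`), not an OptaPlanner API:

```java
class ConstraintImpactSketch {

    /** Score impact of a penalizing constraint: -(total match weight x constraint weight). */
    static int penaltyImpact(int matchWeightTotal, int constraintWeight) {
        return -(matchWeightTotal * constraintWeight);
    }

    public static void main(String[] args) {
        // One conflicting pair of lessons, so the match weight total is 1.
        System.out.println(penaltyImpact(1, 1) + "hard");  // with a weight of 1hard: -1hard
        System.out.println(penaltyImpact(1, 10) + "hard"); // with a weight of 10hard: -10hard
    }
}
```

The test asserts only the first factor (`penalizesBy(1)`), which is why changing the second factor later leaves the test green.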

2.2.9.3. Logging

When adding constraints in your ConstraintProvider,

keep an eye on the score calculation speed in the info log,

after solving for the same amount of time, to assess the performance impact:

... Solving ended: ..., score calculation speed (29455/sec), ...

To understand how OptaPlanner is solving your problem internally,

change the logging in the logback.xml file:

<logger name="org.optaplanner" level="debug"/>

Use debug logging to show every step:

... Solving started: time spent (67), best score (-20init/0hard/0soft), environment mode (REPRODUCIBLE), random (JDK with seed 0).

... CH step (0), time spent (128), score (-18init/0hard/0soft), selected move count (15), picked move ([Math(101) {null -> Room A}, Math(101) {null -> MONDAY 08:30}]).

... CH step (1), time spent (145), score (-16init/0hard/0soft), selected move count (15), picked move ([Physics(102) {null -> Room A}, Physics(102) {null -> MONDAY 09:30}]).

...

Use trace logging to show every step and every move per step.

2.2.9.4. Make a standalone application

In order to run the application outside an IDE easily, you will need to make some changes to the configuration of your build tool.

2.2.9.4.1. Executable JAR in Maven

In Maven, add the following to your pom.xml:

...

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>${version.assembly.plugin}</version>

<configuration>

<finalName>hello-world-run</finalName>

<appendAssemblyId>false</appendAssemblyId>

<descriptors>

<descriptor>src/assembly/jar-with-dependencies-and-services.xml</descriptor>

</descriptors>

<archive>

<manifestEntries>

<Main-Class>org.acme.schooltimetabling.TimeTableApp</Main-Class>

<Multi-Release>true</Multi-Release>

</manifestEntries>

</archive>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

...

</plugins>

...

</build>

...

Also, create a new file in the src/assembly directory called jar-with-dependencies-and-services.xml with the following contents:

<assembly xmlns="http://maven.apache.org/ASSEMBLY/2.1.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/ASSEMBLY/2.1.0 http://maven.apache.org/xsd/assembly-2.1.0.xsd">

<id>jar-with-dependencies-and-services</id>

<formats>

<format>jar</format>

</formats>

<containerDescriptorHandlers>

<containerDescriptorHandler>

<handlerName>metaInf-services</handlerName>

</containerDescriptorHandler>

</containerDescriptorHandlers>

<includeBaseDirectory>false</includeBaseDirectory>

<dependencySets>

<dependencySet>

<outputDirectory>/</outputDirectory>

<useProjectArtifact>true</useProjectArtifact>

<unpack>true</unpack>

<scope>runtime</scope>

</dependencySet>

</dependencySets>

</assembly>

This enables the Maven Assembly Plugin and tells it to do the following:

- Take all dependencies of your project and put their classes and resources into a new JAR.

  - If any of the dependencies use Java SPI, it properly bundles all the service descriptors.

  - If any of the dependencies are multi-release JARs, it takes that into account.

- Set that JAR’s main class to be org.acme.schooltimetabling.TimeTableApp.

- Make that JAR available as hello-world-run.jar in your project’s build directory, most likely target/.

This executable JAR can be run like any other JAR:

$ mvn clean install

...

$ java -jar target/hello-world-run.jar

2.2.9.4.2. Executable application in Gradle

In Gradle, add the following to your build.gradle:

application {

mainClass = "org.acme.schooltimetabling.TimeTableApp"

}

After building the project, you can find an archive with a runnable application inside the build/distributions/ directory.

2.2.10. Summary

Congratulations! You have just developed a Java application with OptaPlanner!

If you ran into any issues, take a look at the quickstart source code.

Read the next guide to build a pretty web application for school timetabling with a REST service and database integration, by leveraging Quarkus.

2.3. Quarkus Java quick start

This guide walks you through the process of creating a Quarkus application with OptaPlanner's constraint solving Artificial Intelligence (AI).

2.3.1. What you will build

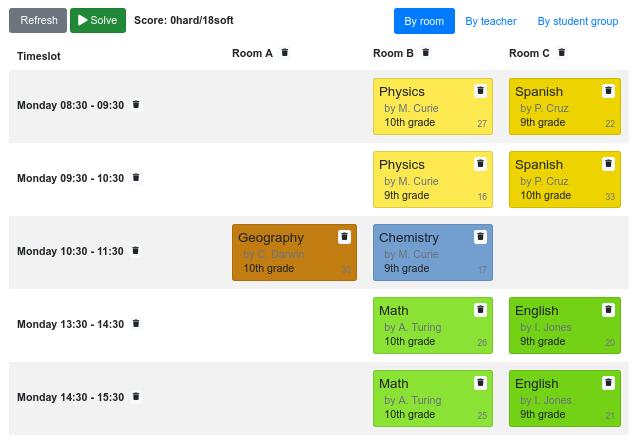

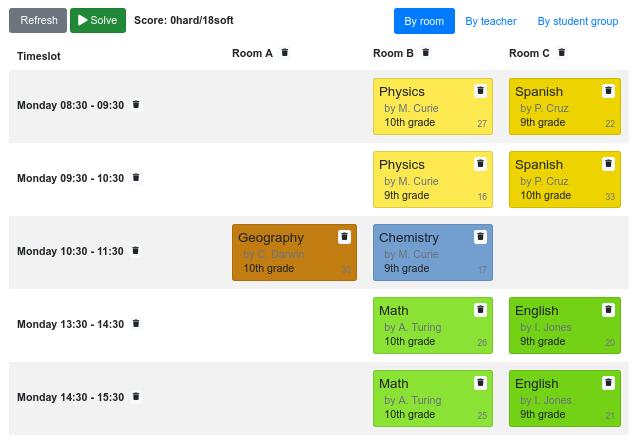

You will build a REST application that optimizes a school timetable for students and teachers:

Your service will assign Lesson instances to Timeslot and Room instances automatically

by using AI to adhere to hard and soft scheduling constraints, such as the following examples:

- A room can have at most one lesson at the same time.

- A teacher can teach at most one lesson at the same time.

- A student can attend at most one lesson at the same time.

- A teacher prefers to teach all lessons in the same room.

- A teacher prefers to teach sequential lessons and dislikes gaps between lessons.

- A student dislikes sequential lessons on the same subject.

Mathematically speaking, school timetabling is an NP-hard problem. This means it is difficult to scale. Simply brute force iterating through all possible combinations takes millions of years for a non-trivial dataset, even on a supercomputer. Luckily, AI constraint solvers such as OptaPlanner have advanced algorithms that deliver a near-optimal solution in a reasonable amount of time.
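To get a feel for why brute force does not scale, the size of the search space can be estimated with a few lines of Java. The sketch assumes that every lesson can independently take any (time slot, room) pair and ignores all constraints; the class and method names are hypothetical, and the sizes (10 time slots, 3 rooms, 20 lessons) are just a small example.

```java
import java.math.BigInteger;

class SearchSpaceSketch {

    /** Number of ways to give each lesson one (time slot, room) pair, ignoring constraints. */
    static BigInteger combinations(int timeslots, int rooms, int lessons) {
        return BigInteger.valueOf((long) timeslots * rooms).pow(lessons);
    }

    public static void main(String[] args) {
        BigInteger small = combinations(10, 3, 20);
        // 30^20 = 348678440100000000000000000000, a 30-digit number
        System.out.println(small);
        System.out.println(small.toString().length() + " digits");
    }
}
```

Each additional lesson multiplies the count by the number of (time slot, room) pairs, so even modest growth in the dataset puts exhaustive enumeration out of reach.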

2.3.2. Solution source code

Follow the instructions in the next sections to create the application step by step (recommended).

Alternatively, you can also skip right to the completed example:

- Clone the Git repository:

  $ git clone https://github.com/kiegroup/optaplanner-quickstarts

  or download an archive.

- Find the solution in the use-cases directory and run it (see its README file).

2.3.3. Prerequisites

To complete this guide, you need:

- JDK 11+ with JAVA_HOME configured appropriately

- Apache Maven 3.8.1+ or Gradle 4+

- An IDE, such as IntelliJ IDEA, VSCode or Eclipse

2.3.4. The build file and the dependencies

Use code.quarkus.io to generate an application with the following extensions, for Maven or Gradle:

- RESTEasy JAX-RS (quarkus-resteasy)

- RESTEasy Jackson (quarkus-resteasy-jackson)

- OptaPlanner (optaplanner-quarkus)

- OptaPlanner Jackson (optaplanner-quarkus-jackson)

Alternatively, generate it from the command line with Maven:

$ mvn io.quarkus:quarkus-maven-plugin:3.0.0.Final:create \

-DprojectGroupId=org.acme \

-DprojectArtifactId=optaplanner-quickstart \

-Dextensions="resteasy,resteasy-jackson,optaplanner-quarkus,optaplanner-quarkus-jackson" \

-DnoExamples

$ cd optaplanner-quickstart

If you choose Maven, your pom.xml file has the following content:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.acme</groupId>

<artifactId>optaplanner-quarkus-school-timetabling-quickstart</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.release>11</maven.compiler.release>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<version.io.quarkus>3.0.0.Final</version.io.quarkus>

<version.org.optaplanner>9.44.0.Final</version.org.optaplanner>

</properties>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-bom</artifactId>

<version>${version.io.quarkus}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>org.optaplanner</groupId>

<artifactId>optaplanner-bom</artifactId>

<version>${version.org.optaplanner}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

<dependencies>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-resteasy</artifactId>

</dependency>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-resteasy-jackson</artifactId>

</dependency>

<dependency>

<groupId>org.optaplanner</groupId>

<artifactId>optaplanner-quarkus</artifactId>

</dependency>

<dependency>

<groupId>org.optaplanner</groupId>

<artifactId>optaplanner-quarkus-jackson</artifactId>

</dependency>

<dependency>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-junit5</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.optaplanner</groupId>

<artifactId>optaplanner-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>io.quarkus</groupId>

<artifactId>quarkus-maven-plugin</artifactId>

<version>${version.io.quarkus}</version>

<executions>

<execution>

<goals>

<goal>build</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<artifactId>maven-surefire-plugin</artifactId>

<configuration>

<systemPropertyVariables>

<java.util.logging.manager>org.jboss.logmanager.LogManager</java.util.logging.manager>

</systemPropertyVariables>

</configuration>

</plugin>

</plugins>

</build>

</project>

On the other hand, in Gradle, your build.gradle file has this content:

plugins {

id "java"

id "io.quarkus" version "3.0.0.Final"

}

def quarkusVersion = "3.0.0.Final"

def optaplannerVersion = "9.44.0.Final"

group = "org.acme"

version = "1.0-SNAPSHOT"

repositories {

mavenCentral()

}

dependencies {

implementation platform("io.quarkus:quarkus-bom:${quarkusVersion}")

implementation "io.quarkus:quarkus-resteasy"

implementation "io.quarkus:quarkus-resteasy-jackson"

testImplementation "io.quarkus:quarkus-junit5"

implementation platform("org.optaplanner:optaplanner-bom:${optaplannerVersion}")

implementation "org.optaplanner:optaplanner-quarkus"

implementation "org.optaplanner:optaplanner-quarkus-jackson"

testImplementation "org.optaplanner:optaplanner-test"

}

java {

sourceCompatibility = JavaVersion.VERSION_11

targetCompatibility = JavaVersion.VERSION_11

}

compileJava {

options.encoding = "UTF-8"

options.compilerArgs << "-parameters"

}

compileTestJava {

options.encoding = "UTF-8"

}

test {

systemProperty "java.util.logging.manager", "org.jboss.logmanager.LogManager"

}

2.3.5. Model the domain objects

Your goal is to assign each lesson to a time slot and a room. You will create these classes:

2.3.5.1. Timeslot

The Timeslot class represents a time interval when lessons are taught,

for example, Monday 10:30 - 11:30 or Tuesday 13:30 - 14:30.

For simplicity’s sake, all time slots have the same duration

and there are no time slots during lunch or other breaks.

A time slot has no date, because a high school schedule just repeats every week. So there is no need for continuous planning.

Create the src/main/java/org/acme/schooltimetabling/domain/Timeslot.java class:

package org.acme.schooltimetabling.domain;

import java.time.DayOfWeek;

import java.time.LocalTime;

public class Timeslot {

private DayOfWeek dayOfWeek;

private LocalTime startTime;

private LocalTime endTime;

public Timeslot() {

}

public Timeslot(DayOfWeek dayOfWeek, LocalTime startTime, LocalTime endTime) {

this.dayOfWeek = dayOfWeek;

this.startTime = startTime;

this.endTime = endTime;

}

public DayOfWeek getDayOfWeek() {

return dayOfWeek;

}

public LocalTime getStartTime() {

return startTime;

}

public LocalTime getEndTime() {

return endTime;

}

@Override

public String toString() {

return dayOfWeek + " " + startTime;

}

}

Because no Timeslot instances change during solving, a Timeslot is called a problem fact.

Such classes do not require any OptaPlanner specific annotations.

Notice the toString() method keeps the output short,

so it is easier to read OptaPlanner’s DEBUG or TRACE log, as shown later.

2.3.5.2. Room

The Room class represents a location where lessons are taught,

for example, Room A or Room B.

For simplicity’s sake, all rooms are without capacity limits

and they can accommodate all lessons.

Create the src/main/java/org/acme/schooltimetabling/domain/Room.java class:

package org.acme.schooltimetabling.domain;

public class Room {

private String name;

public Room() {

}

public Room(String name) {

this.name = name;

}

public String getName() {

return name;

}

@Override

public String toString() {

return name;

}

}

Room instances do not change during solving, so Room is also a problem fact.

2.3.5.3. Lesson

During a lesson, represented by the Lesson class,

a teacher teaches a subject to a group of students,

for example, Math by A.Turing for 9th grade or Chemistry by M.Curie for 10th grade.

If a subject is taught multiple times per week by the same teacher to the same student group,

there are multiple Lesson instances that are only distinguishable by id.

For example, the 9th grade has six math lessons a week.

During solving, OptaPlanner changes the timeslot and room fields of the Lesson class,

to assign each lesson to a time slot and a room.

Because OptaPlanner changes these fields, Lesson is a planning entity:

Most of the fields in the previous diagram contain input data, except for the orange fields:

A lesson’s timeslot and room fields are unassigned (null) in the input data

and assigned (not null) in the output data.

OptaPlanner changes these fields during solving.

Such fields are called planning variables.

In order for OptaPlanner to recognize them,

both the timeslot and room fields require an @PlanningVariable annotation.

Their containing class, Lesson, requires an @PlanningEntity annotation.

Create the src/main/java/org/acme/schooltimetabling/domain/Lesson.java class:

package org.acme.schooltimetabling.domain;

import org.optaplanner.core.api.domain.entity.PlanningEntity;

import org.optaplanner.core.api.domain.lookup.PlanningId;

import org.optaplanner.core.api.domain.variable.PlanningVariable;

@PlanningEntity

public class Lesson {

@PlanningId

private Long id;

private String subject;

private String teacher;

private String studentGroup;

@PlanningVariable

private Timeslot timeslot;

@PlanningVariable

private Room room;

public Lesson() {

}

public Lesson(Long id, String subject, String teacher, String studentGroup) {

this.id = id;

this.subject = subject;

this.teacher = teacher;

this.studentGroup = studentGroup;

}

public Long getId() {

return id;

}

public String getSubject() {

return subject;

}

public String getTeacher() {

return teacher;

}

public String getStudentGroup() {

return studentGroup;

}

public Timeslot getTimeslot() {

return timeslot;

}

public void setTimeslot(Timeslot timeslot) {

this.timeslot = timeslot;

}

public Room getRoom() {

return room;

}

public void setRoom(Room room) {

this.room = room;

}

@Override

public String toString() {

return subject + "(" + id + ")";

}

}

The Lesson class has an @PlanningEntity annotation,

so OptaPlanner knows that this class changes during solving

because it contains one or more planning variables.

The timeslot field has an @PlanningVariable annotation,

so OptaPlanner knows that it can change its value.

In order to find potential Timeslot instances to assign to this field,

OptaPlanner uses the variable type to connect to a value range provider

that provides a List<Timeslot> to pick from.

The room field also has an @PlanningVariable annotation, for the same reasons.


2.3.6. Define the constraints and calculate the score

A score represents the quality of a specific solution. The higher the better. OptaPlanner looks for the best solution, which is the solution with the highest score found in the available time. It might be the optimal solution.

Because this use case has hard and soft constraints,

use the HardSoftScore class to represent the score:

- Hard constraints must not be broken. For example: A room can have at most one lesson at the same time.

- Soft constraints should not be broken. For example: A teacher prefers to teach in a single room.

Hard constraints are weighted against other hard constraints. Soft constraints are weighted too, against other soft constraints. Hard constraints always outweigh soft constraints, regardless of their respective weights.
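The rule that hard always outweighs soft amounts to a lexicographic comparison: compare the hard level first and look at the soft level only on a tie. The following sketch shows this with a hypothetical `SimpleScore` stand-in; it is not OptaPlanner's HardSoftScore class.

```java
import java.util.Comparator;

class HardSoftCompareSketch {

    /** Hypothetical stand-in for a hard/soft score; not OptaPlanner's HardSoftScore. */
    static final class SimpleScore {
        final int hard;
        final int soft;

        SimpleScore(int hard, int soft) {
            this.hard = hard;
            this.soft = soft;
        }

        @Override
        public String toString() {
            return hard + "hard/" + soft + "soft";
        }
    }

    /** Compares the hard level first; the soft level only breaks ties. */
    static final Comparator<SimpleScore> BY_QUALITY =
            Comparator.comparingInt((SimpleScore s) -> s.hard)
                    .thenComparingInt(s -> s.soft);

    /** Returns the better of the two scores (the first one on a tie). */
    static SimpleScore better(SimpleScore a, SimpleScore b) {
        return BY_QUALITY.compare(a, b) >= 0 ? a : b;
    }

    public static void main(String[] args) {
        SimpleScore feasible = new SimpleScore(0, -1000);
        SimpleScore infeasible = new SimpleScore(-1, 0);
        System.out.println(better(feasible, infeasible)); // prints 0hard/-1000soft
    }
}
```

Even a large soft penalty does not make a solution worse than one that breaks a single hard constraint.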

To calculate the score, you could implement an EasyScoreCalculator class:

    public class TimeTableEasyScoreCalculator implements EasyScoreCalculator<TimeTable, HardSoftScore> {

        @Override
        public HardSoftScore calculateScore(TimeTable timeTable) {
            List<Lesson> lessonList = timeTable.getLessonList();
            int hardScore = 0;
            for (Lesson a : lessonList) {
                for (Lesson b : lessonList) {
                    if (a.getTimeslot() != null && a.getTimeslot().equals(b.getTimeslot())
                            && a.getId() < b.getId()) {
                        // A room can accommodate at most one lesson at the same time.
                        if (a.getRoom() != null && a.getRoom().equals(b.getRoom())) {
                            hardScore--;
                        }
                        // A teacher can teach at most one lesson at the same time.
                        if (a.getTeacher().equals(b.getTeacher())) {
                            hardScore--;
                        }
                        // A student can attend at most one lesson at the same time.
                        if (a.getStudentGroup().equals(b.getStudentGroup())) {
                            hardScore--;
                        }
                    }
                }
            }
            int softScore = 0;
            // Soft constraints are only implemented in the optaplanner-quickstarts code
            return HardSoftScore.of(hardScore, softScore);
        }

    }

Unfortunately that does not scale well, because it is non-incremental: every time a lesson is assigned to a different time slot or room, all lessons are re-evaluated to calculate the new score.

Instead, create a src/main/java/org/acme/schooltimetabling/solver/TimeTableConstraintProvider.java class to perform incremental score calculation. It uses OptaPlanner’s ConstraintStream API, which is inspired by Java Streams and SQL:

    package org.acme.schooltimetabling.solver;

    import org.acme.schooltimetabling.domain.Lesson;
    import org.optaplanner.core.api.score.buildin.hardsoft.HardSoftScore;
    import org.optaplanner.core.api.score.stream.Constraint;
    import org.optaplanner.core.api.score.stream.ConstraintFactory;
    import org.optaplanner.core.api.score.stream.ConstraintProvider;
    import org.optaplanner.core.api.score.stream.Joiners;

    public class TimeTableConstraintProvider implements ConstraintProvider {

        @Override
        public Constraint[] defineConstraints(ConstraintFactory constraintFactory) {
            return new Constraint[] {
                    // Hard constraints
                    roomConflict(constraintFactory),
                    teacherConflict(constraintFactory),
                    studentGroupConflict(constraintFactory),
                    // Soft constraints are only implemented in the optaplanner-quickstarts code
            };
        }

        private Constraint roomConflict(ConstraintFactory constraintFactory) {
            // A room can accommodate at most one lesson at the same time.

            // Select a lesson ...
            return constraintFactory
                    .forEach(Lesson.class)
                    // ... and pair it with another lesson ...
                    .join(Lesson.class,
                            // ... in the same timeslot ...
                            Joiners.equal(Lesson::getTimeslot),
                            // ... in the same room ...
                            Joiners.equal(Lesson::getRoom),
                            // ... and the pair is unique (different id, no reverse pairs) ...
                            Joiners.lessThan(Lesson::getId))
                    // ... then penalize each pair with a hard weight.
                    .penalize(HardSoftScore.ONE_HARD)
                    .asConstraint("Room conflict");
        }

        private Constraint teacherConflict(ConstraintFactory constraintFactory) {
            // A teacher can teach at most one lesson at the same time.
            return constraintFactory.forEach(Lesson.class)
                    .join(Lesson.class,
                            Joiners.equal(Lesson::getTimeslot),
                            Joiners.equal(Lesson::getTeacher),
                            Joiners.lessThan(Lesson::getId))
                    .penalize(HardSoftScore.ONE_HARD)
                    .asConstraint("Teacher conflict");
        }

        private Constraint studentGroupConflict(ConstraintFactory constraintFactory) {
            // A student can attend at most one lesson at the same time.
            return constraintFactory.forEach(Lesson.class)
                    .join(Lesson.class,
                            Joiners.equal(Lesson::getTimeslot),
                            Joiners.equal(Lesson::getStudentGroup),
                            Joiners.lessThan(Lesson::getId))
                    .penalize(HardSoftScore.ONE_HARD)
                    .asConstraint("Student group conflict");
        }

    }

The ConstraintProvider scales an order of magnitude better than the EasyScoreCalculator: O(n) instead of O(n²).
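To see why joining on equal keys can avoid inspecting every pair, consider the following plain Java sketch. It is not how OptaPlanner implements ConstraintStreams internally: the ConflictCounting class and both methods are hypothetical, and each lesson is reduced to a {timeslot, room} pair that is assumed to be non-null. Both methods return the same number of room conflicts, but the second one groups lessons by key in a single pass instead of comparing all pairs.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration; not part of the OptaPlanner API.
final class ConflictCounting {

    // Compares every pair of lessons once: quadratic in the number of lessons.
    static int countPairwise(List<String[]> lessons) {
        int conflicts = 0;
        for (int i = 0; i < lessons.size(); i++) {
            for (int j = i + 1; j < lessons.size(); j++) {
                String[] a = lessons.get(i);
                String[] b = lessons.get(j);
                if (a[0].equals(b[0]) && a[1].equals(b[1])) {
                    conflicts++;
                }
            }
        }
        return conflicts;
    }

    // Groups lessons by (timeslot, room) in one pass over the list, then
    // counts the pairs inside each group: k lessons form k * (k - 1) / 2 pairs.
    static int countGrouped(List<String[]> lessons) {
        Map<List<String>, Integer> groupSizes = new HashMap<>();
        for (String[] lesson : lessons) {
            groupSizes.merge(List.of(lesson[0], lesson[1]), 1, Integer::sum);
        }
        int conflicts = 0;
        for (int size : groupSizes.values()) {
            conflicts += size * (size - 1) / 2;
        }
        return conflicts;
    }

    public static void main(String[] args) {
        List<String[]> lessons = List.of(
                new String[] {"MON 08:30", "Room A"},
                new String[] {"MON 08:30", "Room A"},
                new String[] {"MON 08:30", "Room B"},
                new String[] {"TUE 08:30", "Room A"});
        System.out.println(countPairwise(lessons) + " " + countGrouped(lessons));
    }
}
```

The sketch only covers the grouping idea. Incremental calculation goes further by updating the score when a single lesson changes, rather than recounting from scratch.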

2.3.7. Gather the domain objects in a planning solution

A TimeTable wraps all Timeslot, Room, and Lesson instances of a single dataset. Furthermore, because it contains all lessons, each with a specific planning variable state, it is a planning solution and it has a score:

- If lessons are still unassigned, then it is an uninitialized solution, for example, a solution with the score -4init/0hard/0soft.

- If it breaks hard constraints, then it is an infeasible solution, for example, a solution with the score -2hard/-3soft.

- If it adheres to all hard constraints, then it is a feasible solution, for example, a solution with the score 0hard/-7soft.
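The three cases above can be sketched as a small classification. This assumes that the init and hard levels are available as plain int values; the ScoreFeasibility class and its classify method are hypothetical and not part of the OptaPlanner API.

```java
// Hypothetical helper for illustration only.
final class ScoreFeasibility {

    // Classifies a score from its init level and hard level.
    static String classify(int initScore, int hardScore) {
        if (initScore < 0) {
            // Some planning variables are still unassigned.
            return "uninitialized";
        }
        if (hardScore < 0) {
            // At least one hard constraint is broken.
            return "infeasible";
        }
        // All hard constraints are met; soft penalties may remain.
        return "feasible";
    }

    public static void main(String[] args) {
        System.out.println(classify(-4, 0)); // -4init/0hard/0soft
        System.out.println(classify(0, -2)); // -2hard/-3soft
        System.out.println(classify(0, 0));  // 0hard/-7soft
    }
}
```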

Create the src/main/java/org/acme/schooltimetabling/domain/TimeTable.java class:

    package org.acme.schooltimetabling.domain;

    import java.util.List;

    import org.optaplanner.core.api.domain.solution.PlanningEntityCollectionProperty;
    import org.optaplanner.core.api.domain.solution.PlanningScore;
    import org.optaplanner.core.api.domain.solution.PlanningSolution;
    import org.optaplanner.core.api.domain.solution.ProblemFactCollectionProperty;
    import org.optaplanner.core.api.domain.valuerange.ValueRangeProvider;
    import org.optaplanner.core.api.score.buildin.hardsoft.HardSoftScore;

    @PlanningSolution
    public class TimeTable {

        @ValueRangeProvider
        @ProblemFactCollectionProperty
        private List<Timeslot> timeslotList;

        @ValueRangeProvider
        @ProblemFactCollectionProperty
        private List<Room> roomList;

        @PlanningEntityCollectionProperty
        private List<Lesson> lessonList;

        @PlanningScore
        private HardSoftScore score;

        public TimeTable() {
        }

        public TimeTable(List<Timeslot> timeslotList, List<Room> roomList, List<Lesson> lessonList) {
            this.timeslotList = timeslotList;
            this.roomList = roomList;
            this.lessonList = lessonList;
        }

        public List<Timeslot> getTimeslotList() {
            return timeslotList;
        }

        public List<Room> getRoomList() {
            return roomList;
        }

        public List<Lesson> getLessonList() {
            return lessonList;
        }

        public HardSoftScore getScore() {
            return score;
        }

    }

The TimeTable class has an @PlanningSolution annotation, so OptaPlanner knows that this class contains all of the input and output data.

Specifically, this class is the input of the problem:

- A timeslotList field with all time slots
  - This is a list of problem facts, because they do not change during solving.

- A roomList field with all rooms
  - This is a list of problem facts, because they do not change during solving.

- A lessonList field with all lessons
  - This is a list of planning entities, because they change during solving.
  - Of each Lesson:
    - The values of the timeslot and room fields are typically still null, so unassigned. They are planning variables.
    - The other fields, such as subject, teacher and studentGroup, are filled in. These fields are problem properties.

However, this class is also the output of the solution:

- A lessonList field for which each Lesson instance has non-null timeslot and room fields after solving

- A score field that represents the quality of the output solution, for example, 0hard/-5soft

2.3.7.1. The value range providers

The timeslotList field is a value range provider. It holds the Timeslot instances which OptaPlanner can pick from to assign to the timeslot field of Lesson instances. The timeslotList field has an @ValueRangeProvider annotation to connect the @PlanningVariable with the @ValueRangeProvider, by matching the type of the planning variable with the type returned by the value range provider.

Following the same logic, the roomList field also has an @ValueRangeProvider annotation.

2.3.7.2. The problem fact and planning entity properties

Furthermore, OptaPlanner needs to know which Lesson instances it can change as well as how to retrieve the Timeslot and Room instances used for score calculation by your TimeTableConstraintProvider.

The timeslotList and roomList fields have an @ProblemFactCollectionProperty annotation, so your TimeTableConstraintProvider can select from those instances.

The lessonList has an @PlanningEntityCollectionProperty annotation, so OptaPlanner can change them during solving and your TimeTableConstraintProvider can select from those too.

2.3.8. Create the solver service

Now you are ready to put everything together and create a REST service. However, solving planning problems on REST threads can cause HTTP timeout issues. Therefore, the Quarkus extension injects a SolverManager instance, which runs solvers in a separate thread pool and can solve multiple datasets in parallel.
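The idea of moving work off the request thread can be sketched with the JDK's ExecutorService. This is only an analogy for what the text describes, not the SolverManager implementation: BackgroundSolveSketch, pretendSolve and solveInBackground are hypothetical names, and the "solve" is a trivial sum.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical illustration; not part of the OptaPlanner API.
final class BackgroundSolveSketch {

    // Stand-in for a long-running solve; it just sums the input values.
    static int pretendSolve(int[] problem) {
        int total = 0;
        for (int value : problem) {
            total += value;
        }
        return total;
    }

    // Submits the work to a thread pool and waits for the result.
    // A real service would share one pool instead of creating one per call.
    static int solveInBackground(int[] problem) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Future<Integer> job = pool.submit(() -> pretendSolve(problem));
            // Blocks the calling thread until the background job finishes.
            return job.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for the job.", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("The background job failed.", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(solveInBackground(new int[] {1, 2, 3}));
    }
}
```

In this sketch the caller still waits for the job to finish, so the work runs on a pool thread but the caller is not released early.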

Create the src/main/java/org/acme/schooltimetabling/rest/TimeTableResource.java class:

package org.acme.schooltimetabling.rest;

import java.util.UUID;

import java.util.concurrent.ExecutionException;

import javax.inject.Inject;

import javax.ws.rs.POST;

import javax.ws.rs.Path;

import org.acme.schooltimetabling.domain.TimeTable;

import org.optaplanner.core.api.solver.SolverJob;

import org.optaplanner.core.api.solver.SolverManager;

@Path("/timeTable")

public class TimeTableResource {

@Inject

SolverManager<TimeTable, UUID> solverManager;

@POST

@Path("/solve")

public TimeTable solve(TimeTable problem) {

UUID problemId = UUID.randomUUID();

// Submit the problem to start solving

SolverJob<TimeTable, UUID> solverJob = solverManager.solve(problemId, problem);

TimeTable solution;

try {

// Wait until the solving ends

solution = solverJob.getFinalBestSolution();